Use case walkthrough: User workflow in APEX AIOps Incident Management

This video walks through a typical workflow for an Incident Management user as they resolve an incident.

In this video, we will step through the typical workflow of an APEX AIOps Incident Management user as they work through incidents.

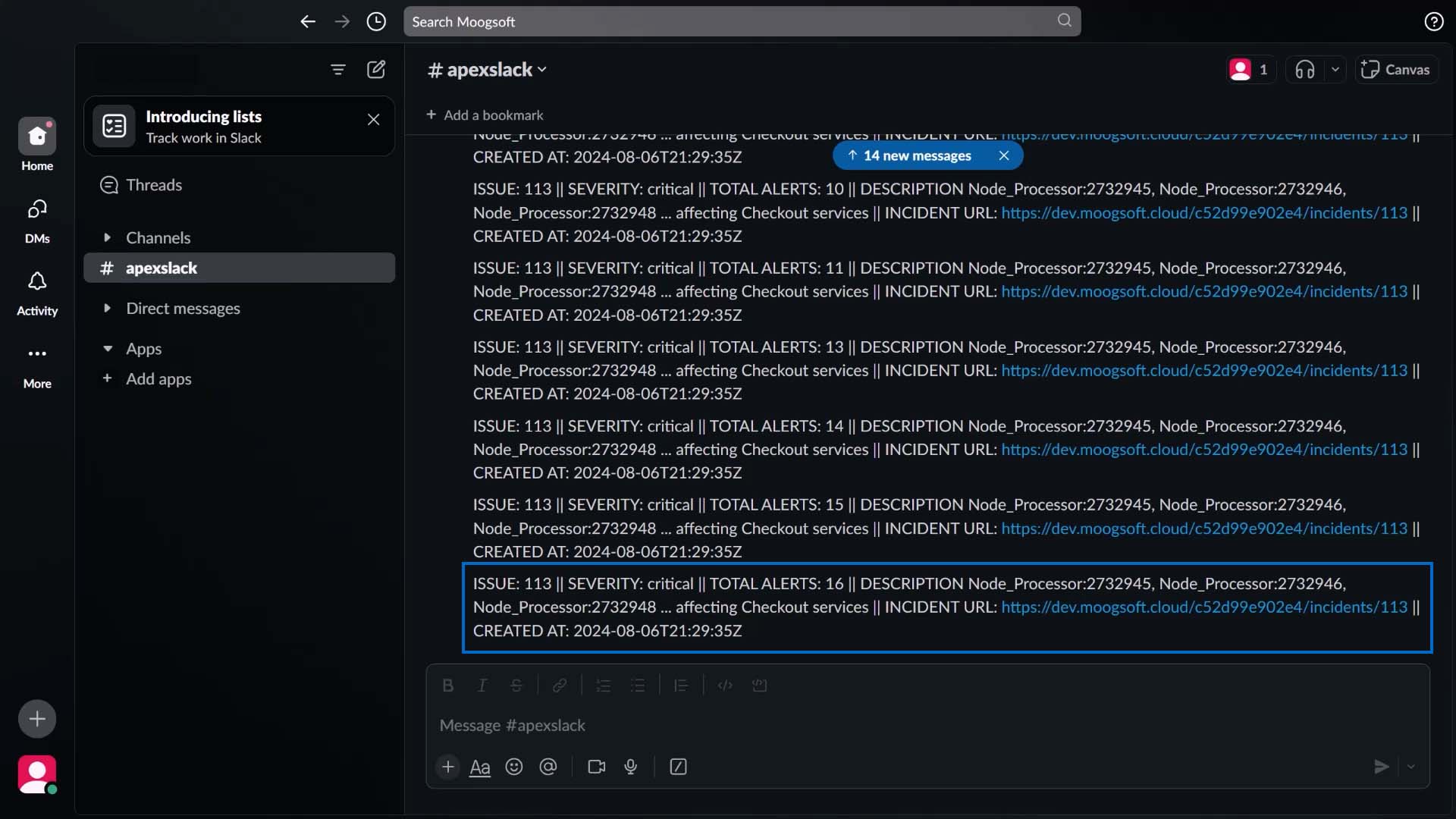

Here comes a Slack message notifying us that there’s a critical incident requiring our attention.

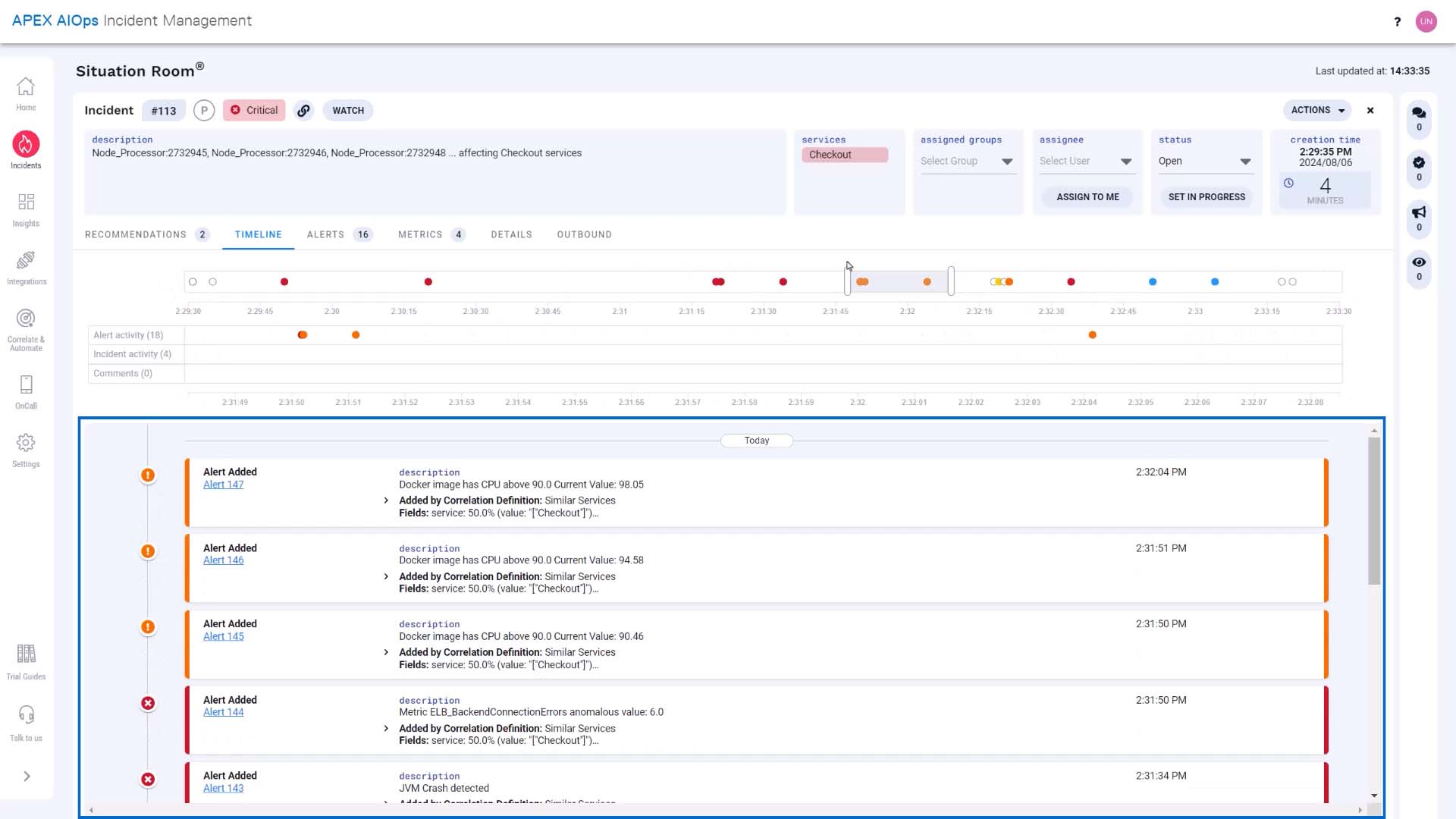

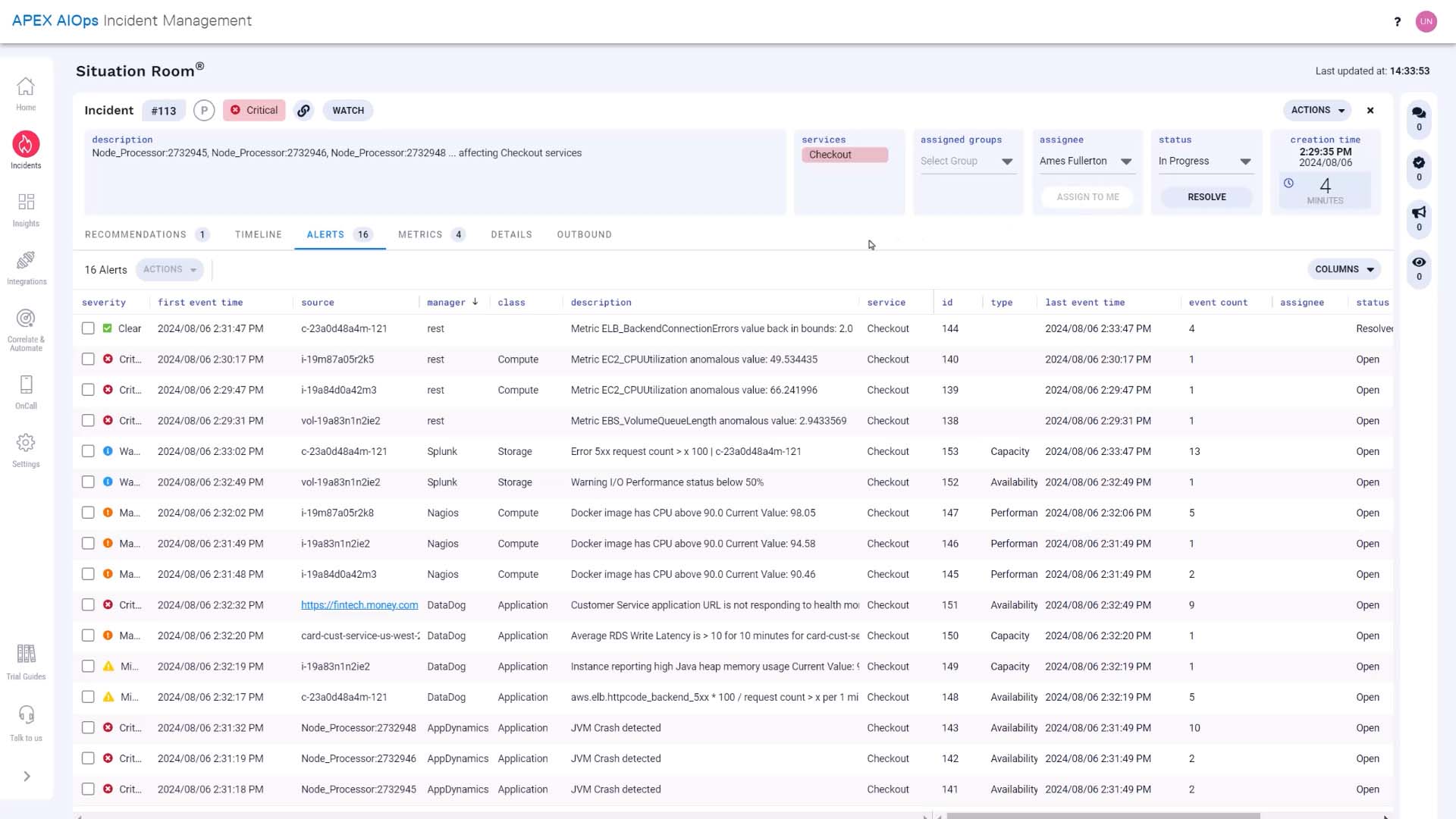

So we click through to Incident Management, which takes us to this incident’s Situation Room.

The Situation Room is where you and your team can collaborate on an incident. This is the timeline for this incident. These sliders let you zoom in on particular areas, and the list below filters to match the time frame you choose.
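As a rough illustration of how a time-frame filter like this can work, here is a minimal Python sketch. It is not the product's implementation: `TimelineEntry`, `filter_by_window`, and the sample timestamps are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class TimelineEntry:
    # Hypothetical record for one item in an incident timeline.
    timestamp: datetime
    description: str


def filter_by_window(entries, start, end):
    """Return entries whose timestamp falls within [start, end], oldest first."""
    selected = [e for e in entries if start <= e.timestamp <= end]
    return sorted(selected, key=lambda e: e.timestamp)


# Made-up sample data, for illustration only.
entries = [
    TimelineEntry(datetime(2024, 1, 1, 10, 0, 5), "Backend connection error"),
    TimelineEntry(datetime(2024, 1, 1, 10, 0, 0), "Volume queue length warning"),
    TimelineEntry(datetime(2024, 1, 1, 10, 0, 2), "CPU usage warning"),
]

# Narrowing the window (as the sliders do) narrows the list.
visible = filter_by_window(
    entries,
    start=datetime(2024, 1, 1, 10, 0, 0),
    end=datetime(2024, 1, 1, 10, 0, 3),
)
for entry in visible:
    print(entry.timestamp.time(), entry.description)
```

Moving either boundary changes only which entries pass the filter. The underlying list stays untouched.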

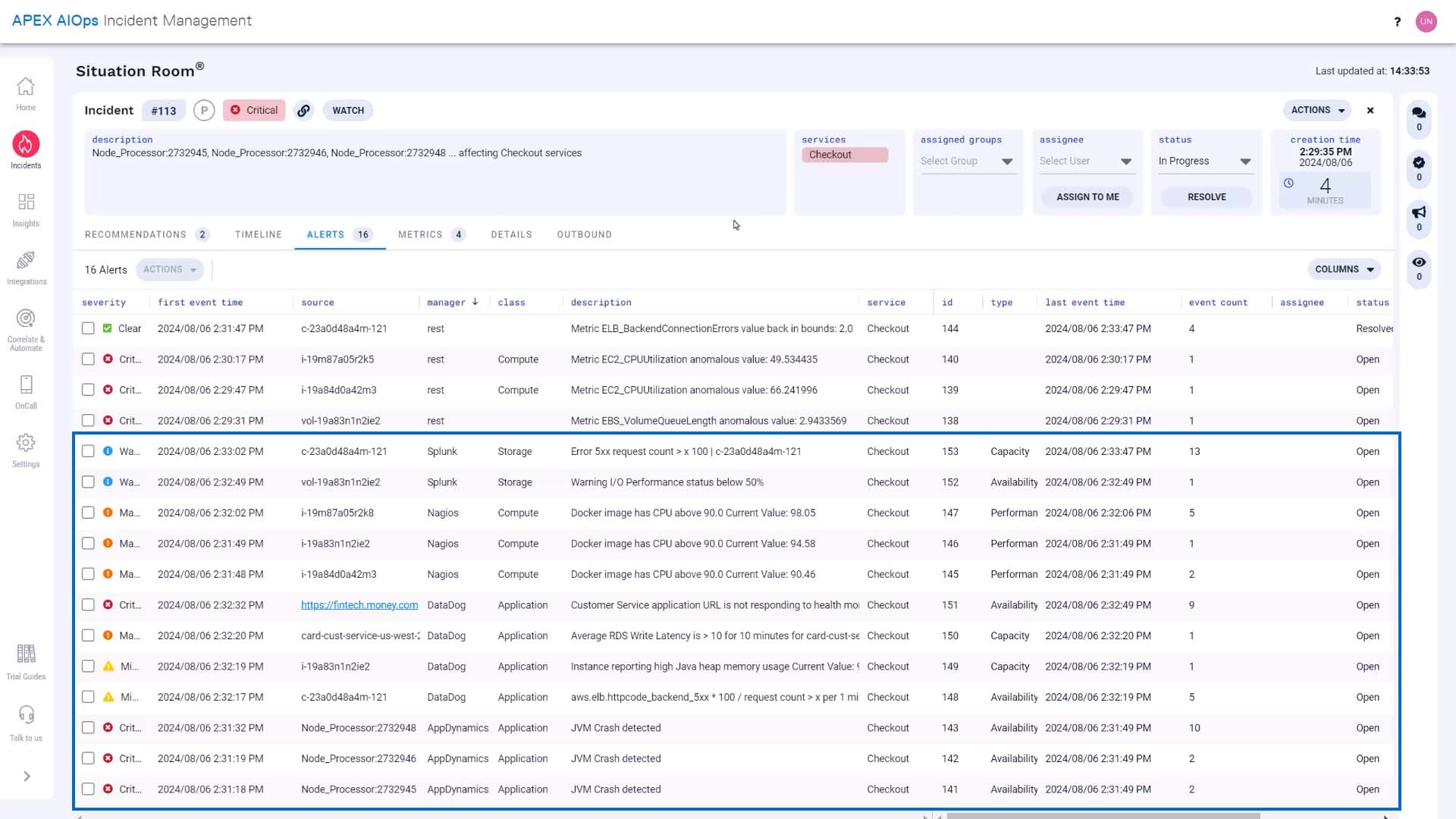

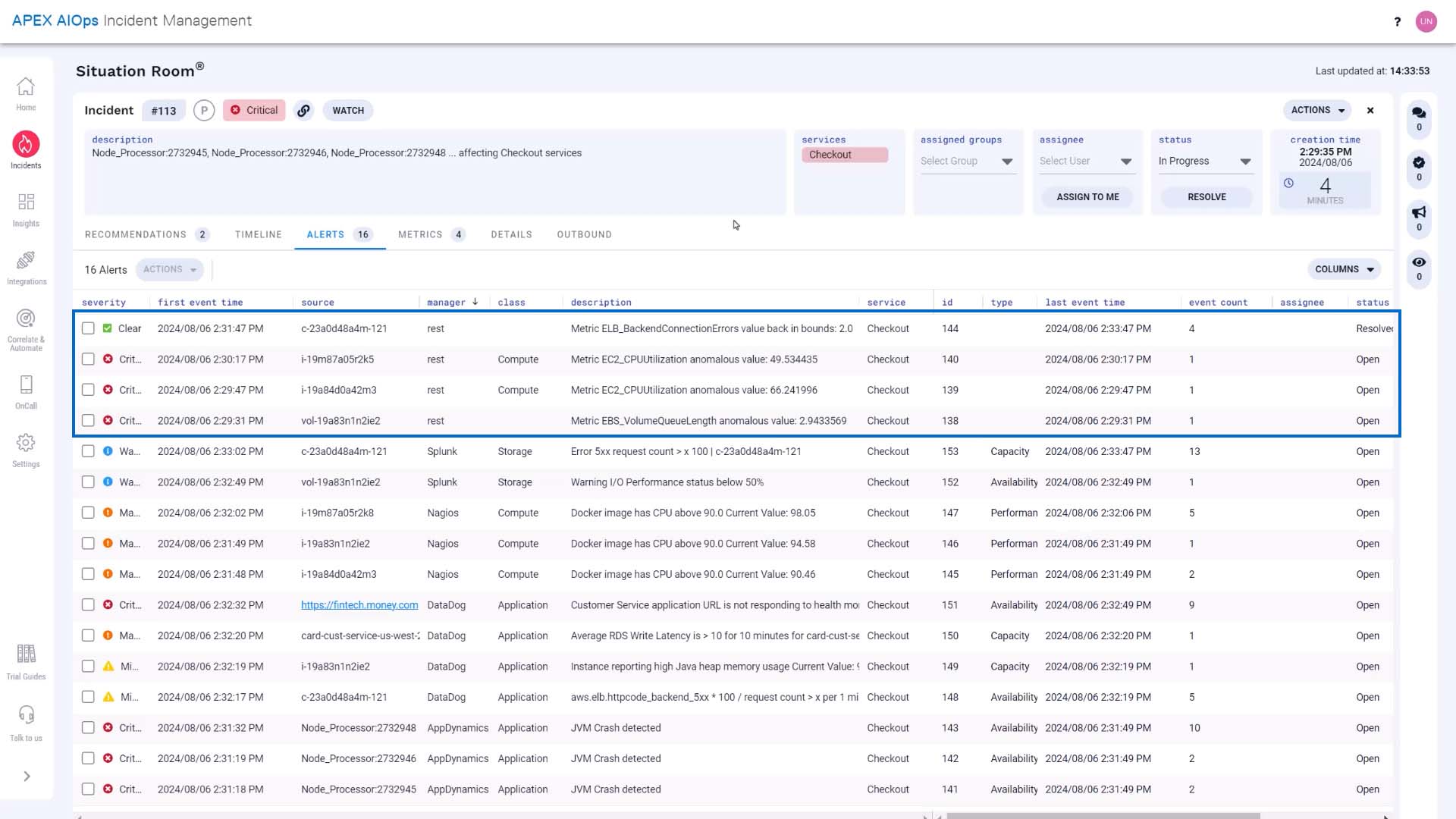

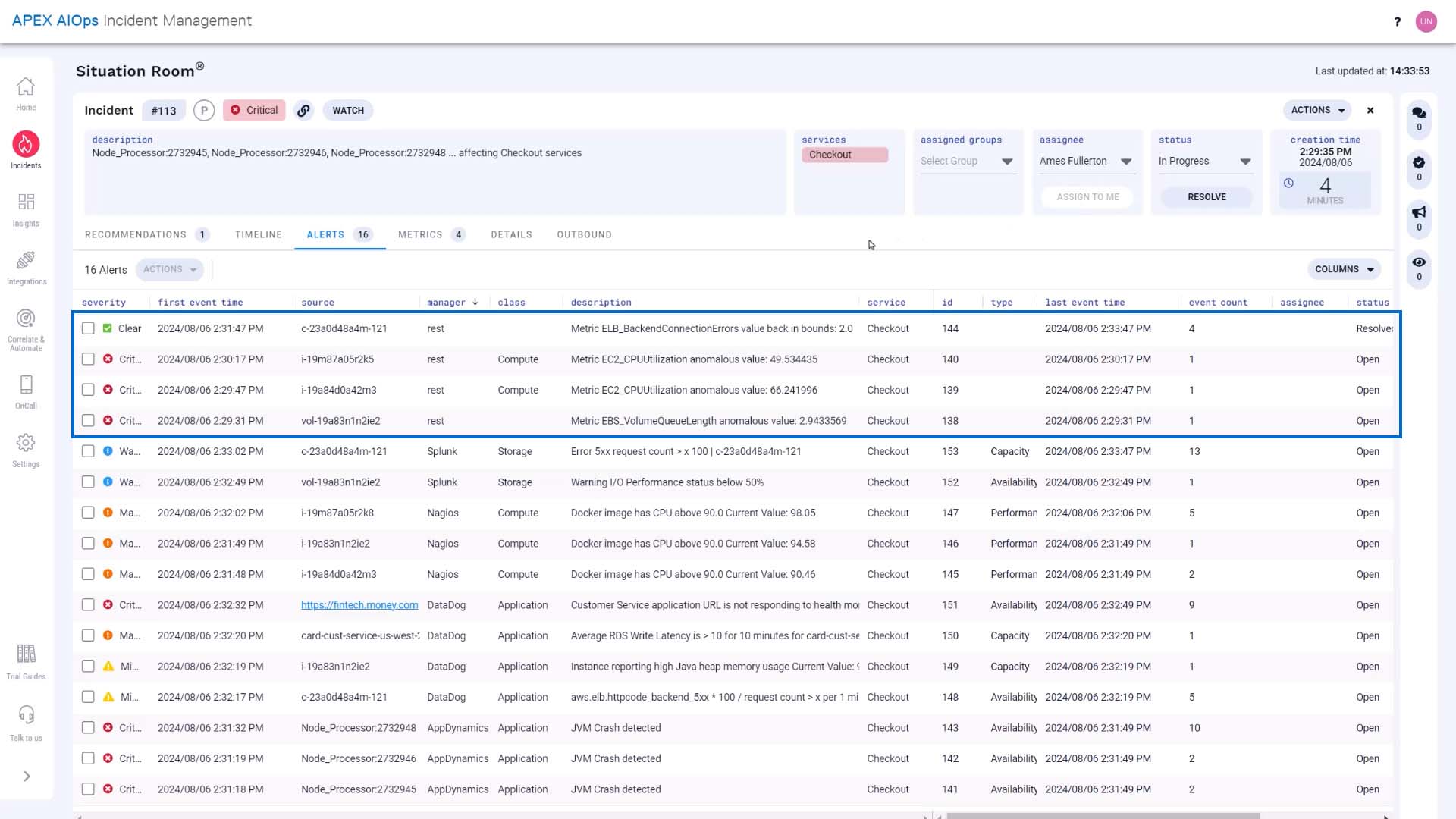

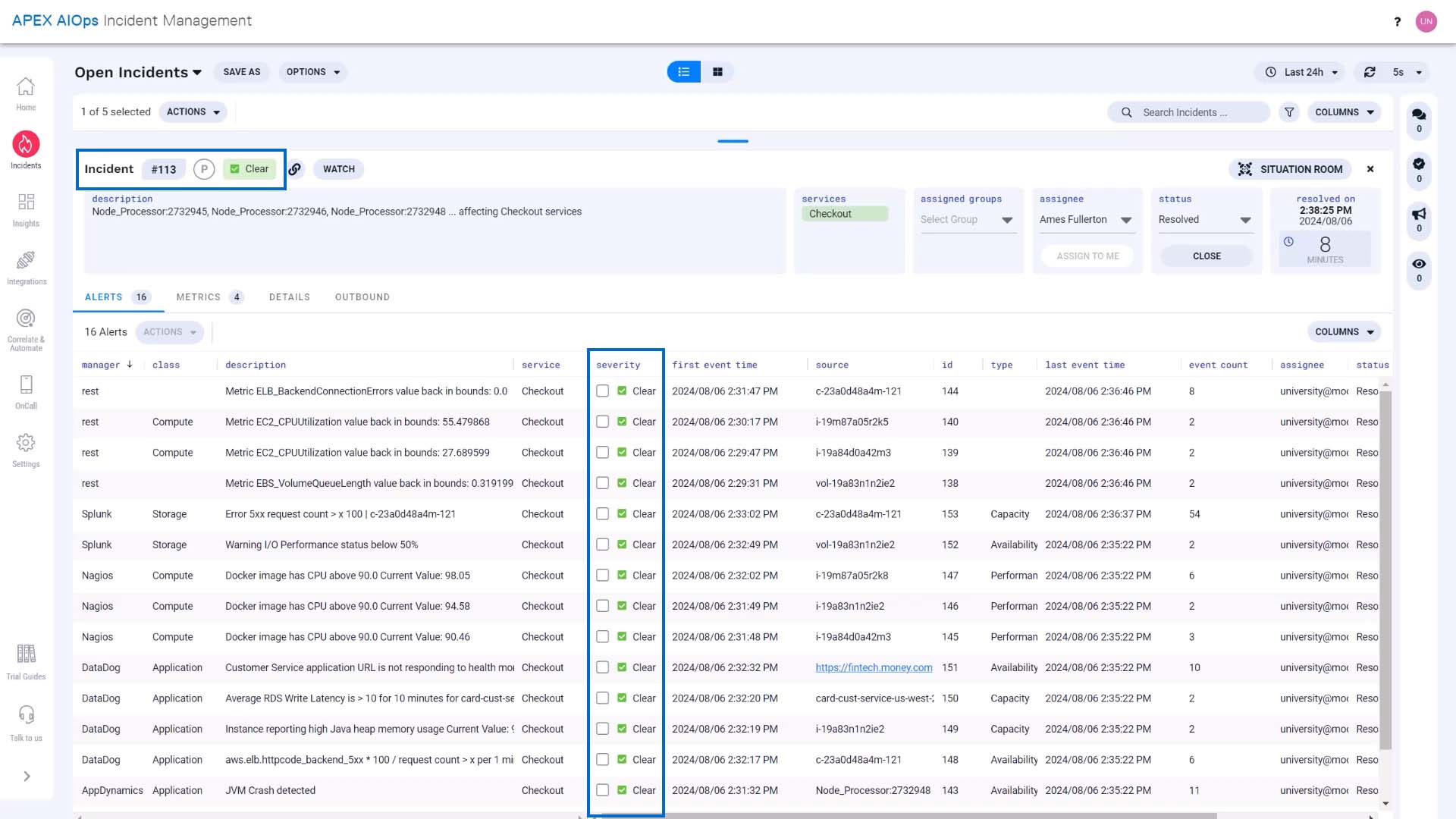

Let’s examine all alerts. These are the alerts that make up this incident. Some of these are alerts from a monitoring system.

And these are alerts generated by Incident Management based on the metrics it is tracking.

We are going to own this incident.

Now we will start our investigation.

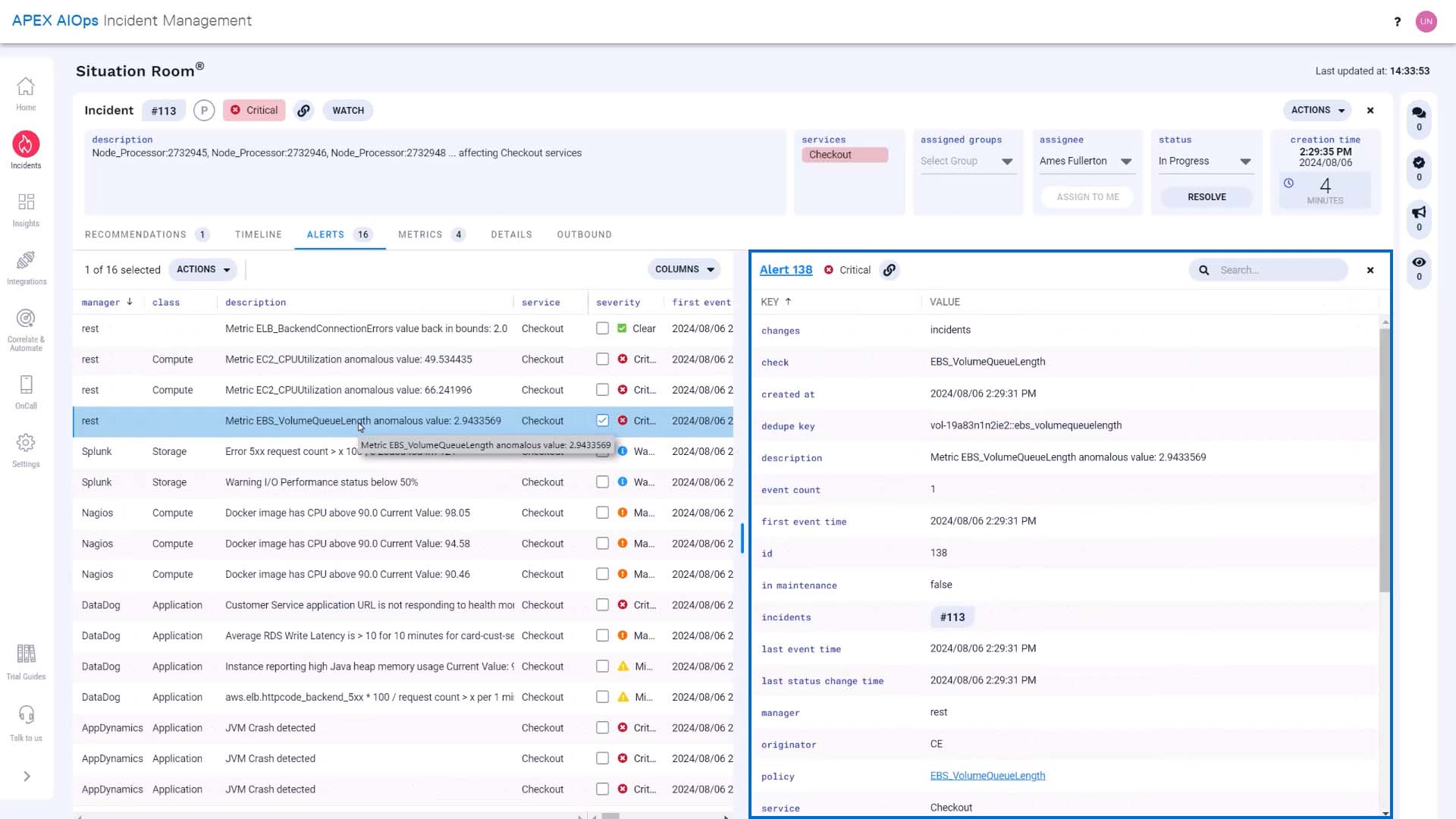

These alerts came in within a few seconds of each other. Let’s look at the details of the alert that first came in.

All attributes of this alert are visible now.

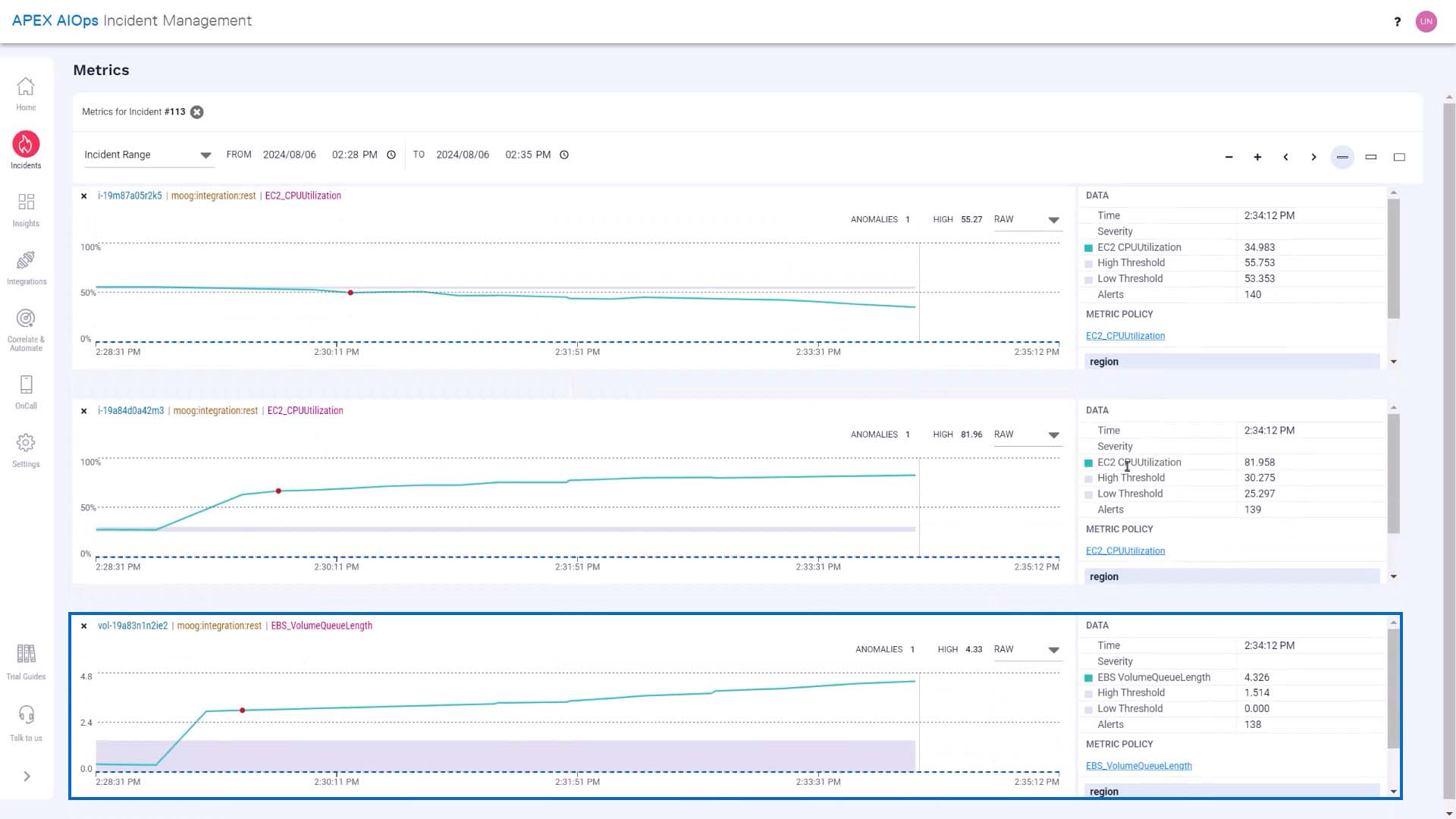

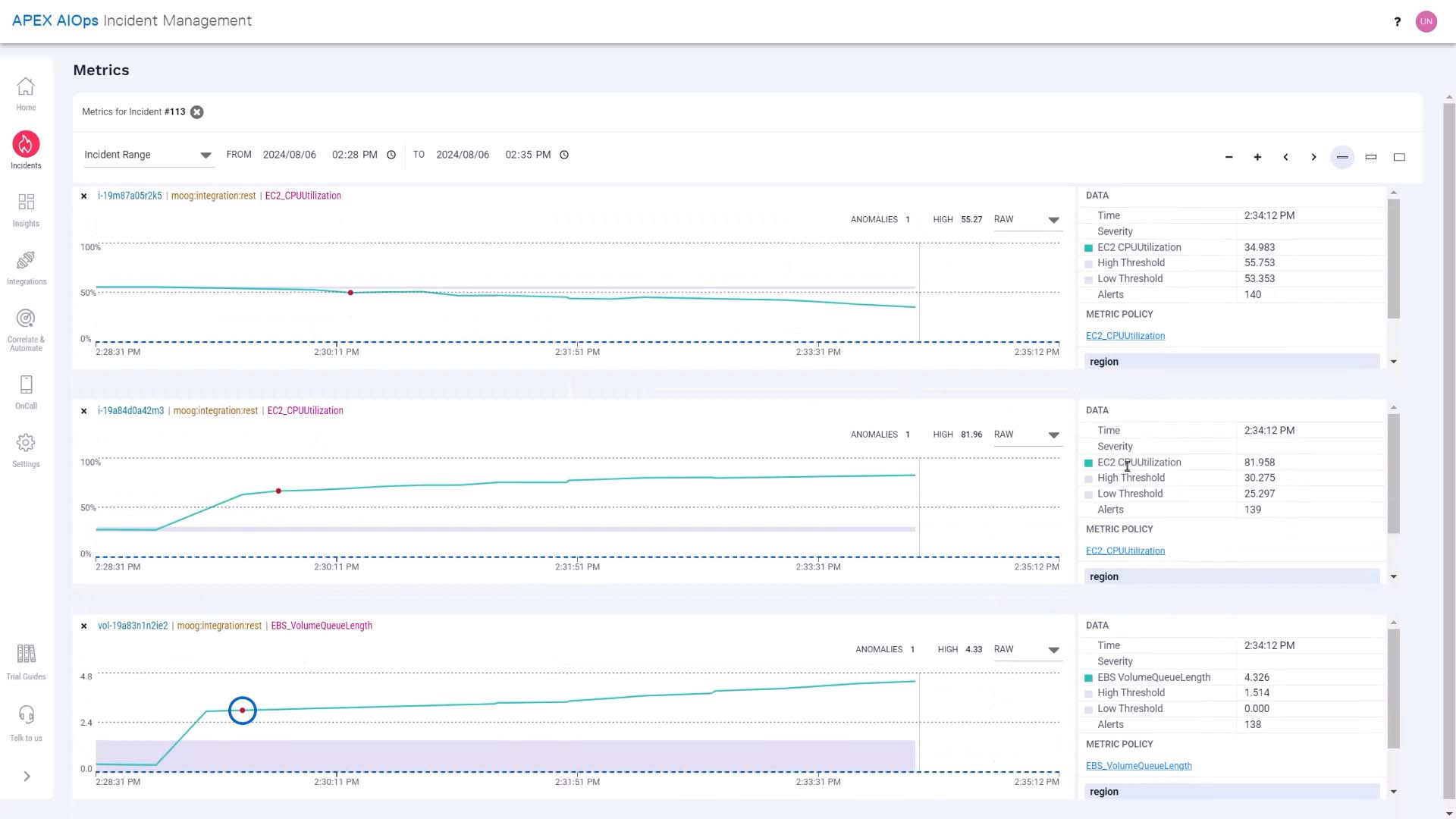

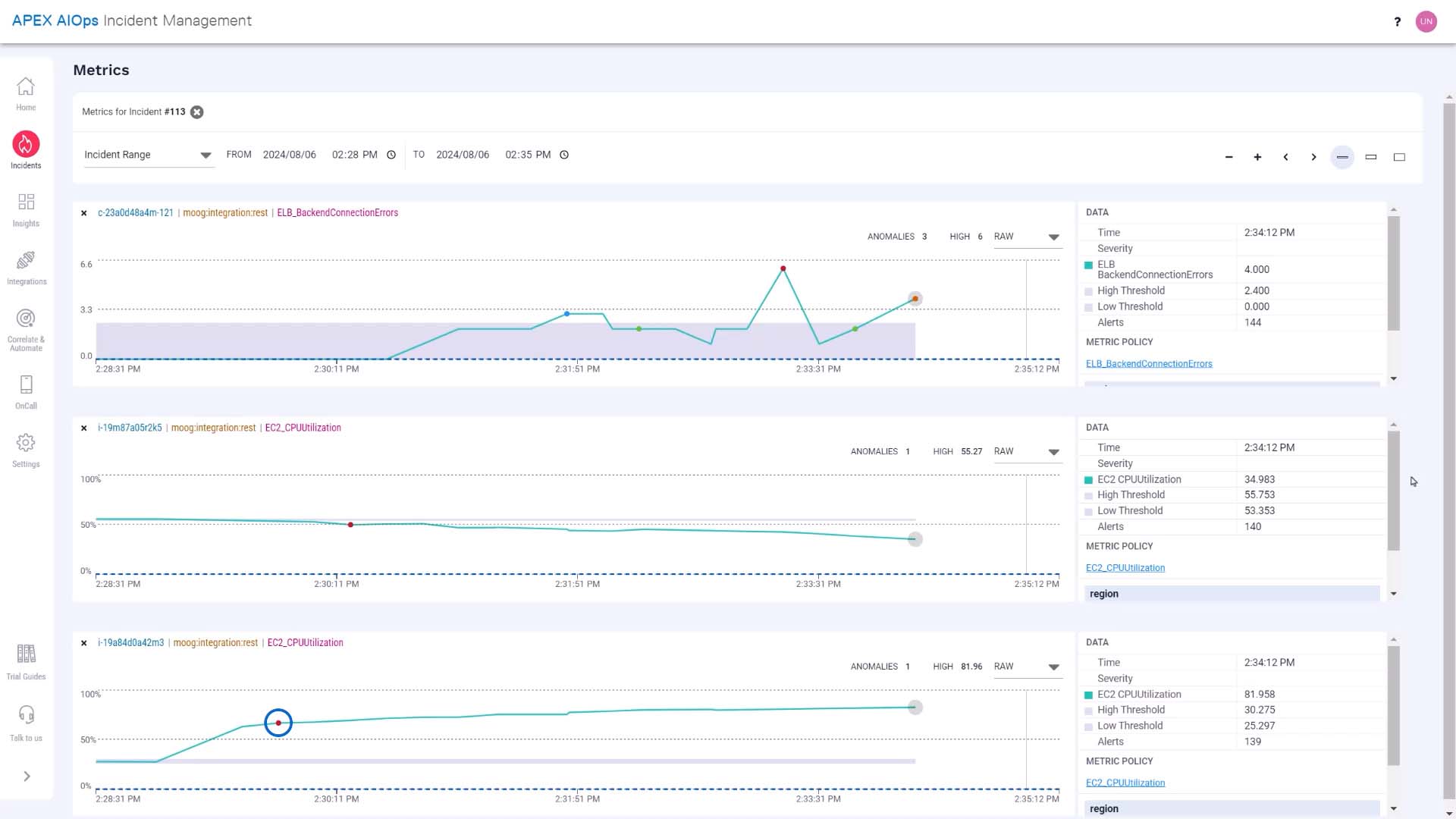

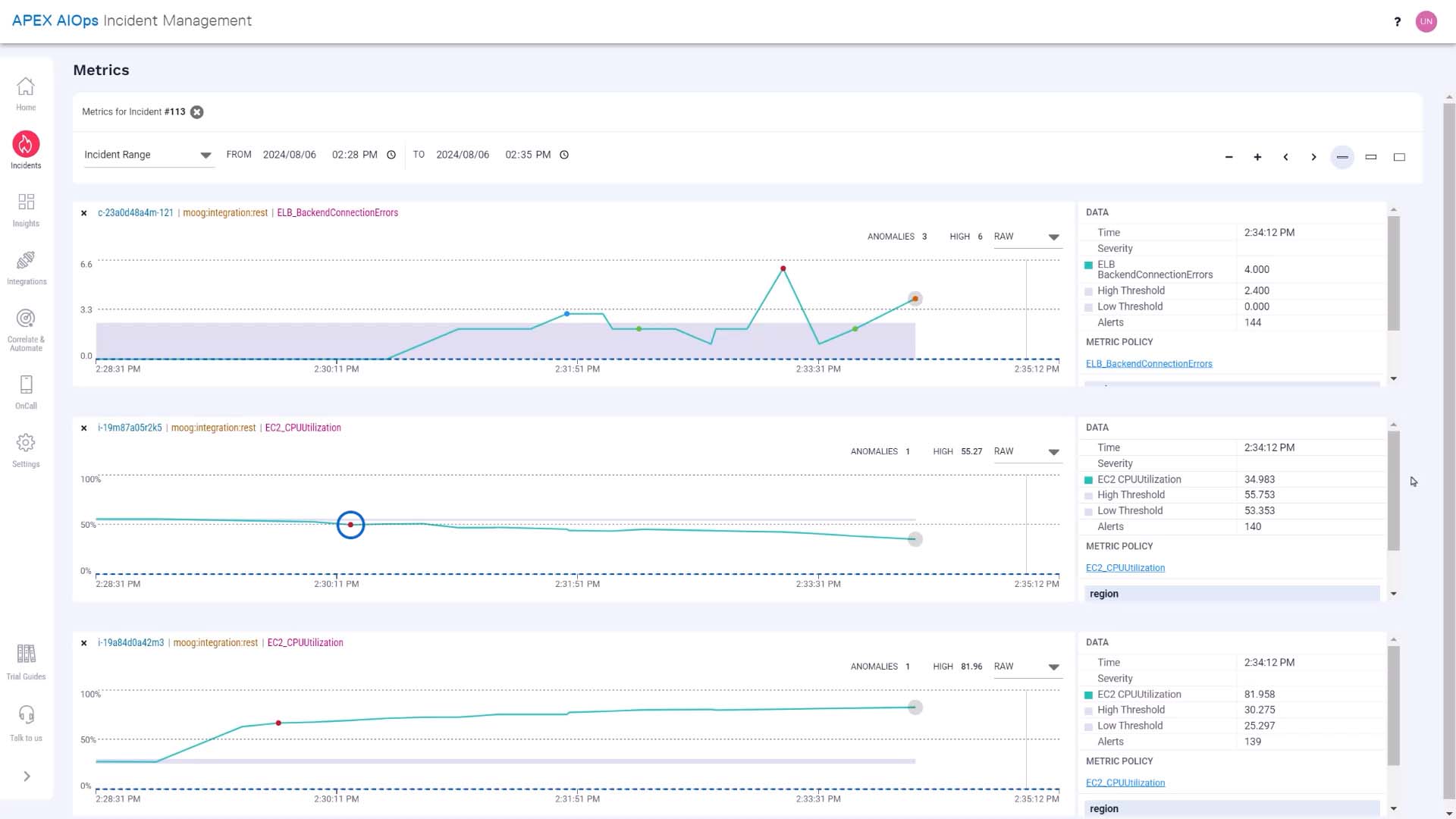

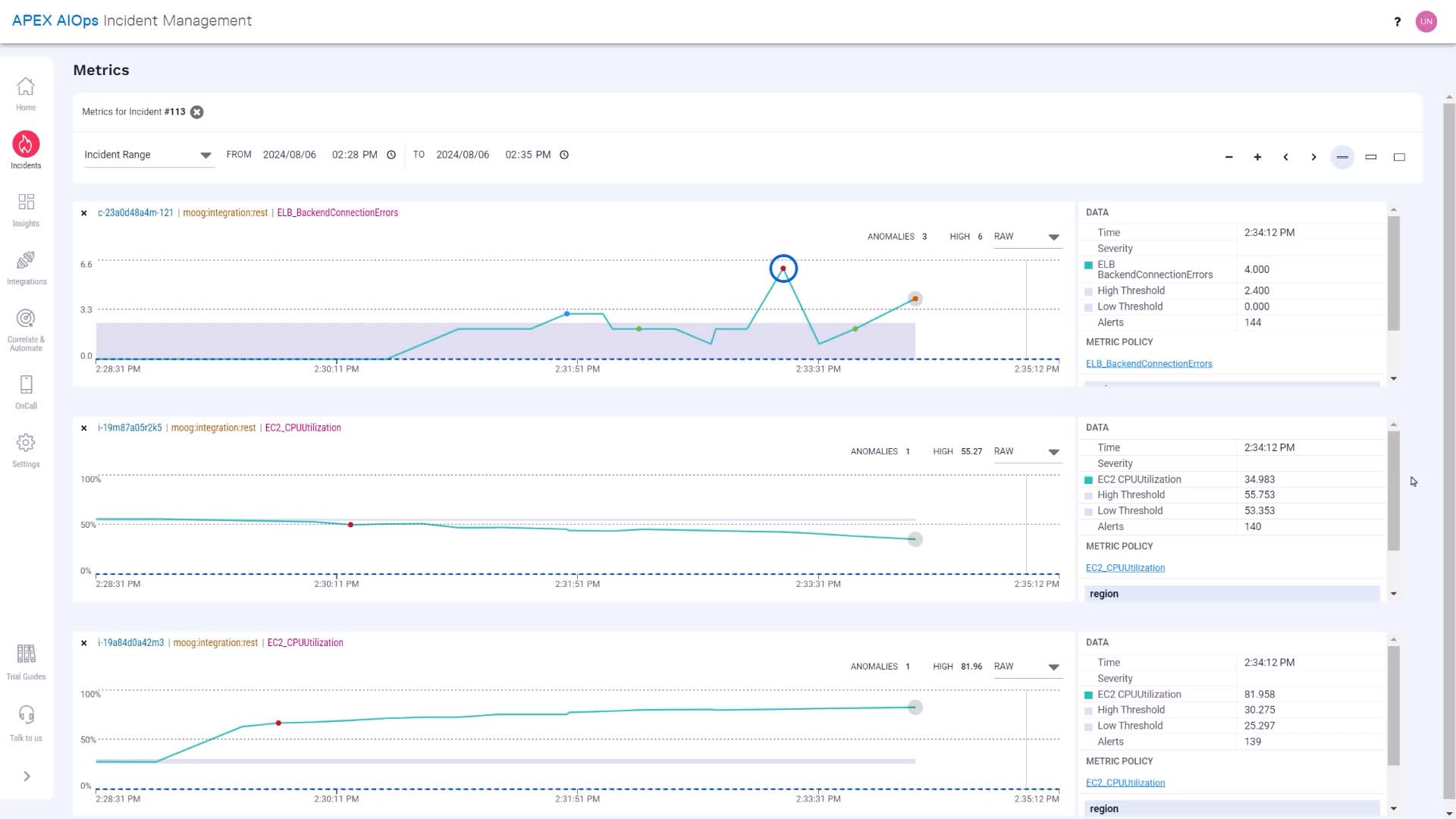

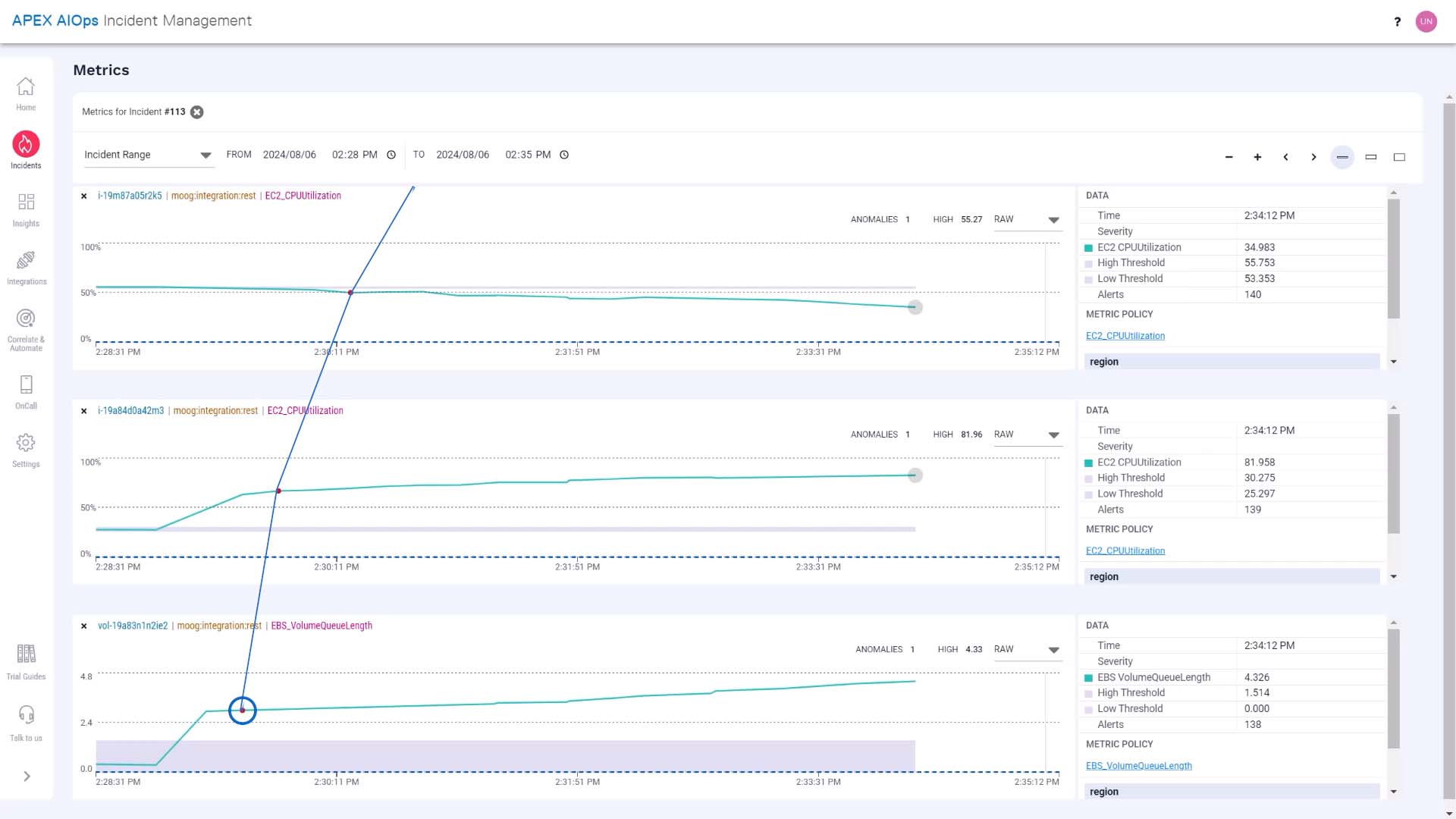

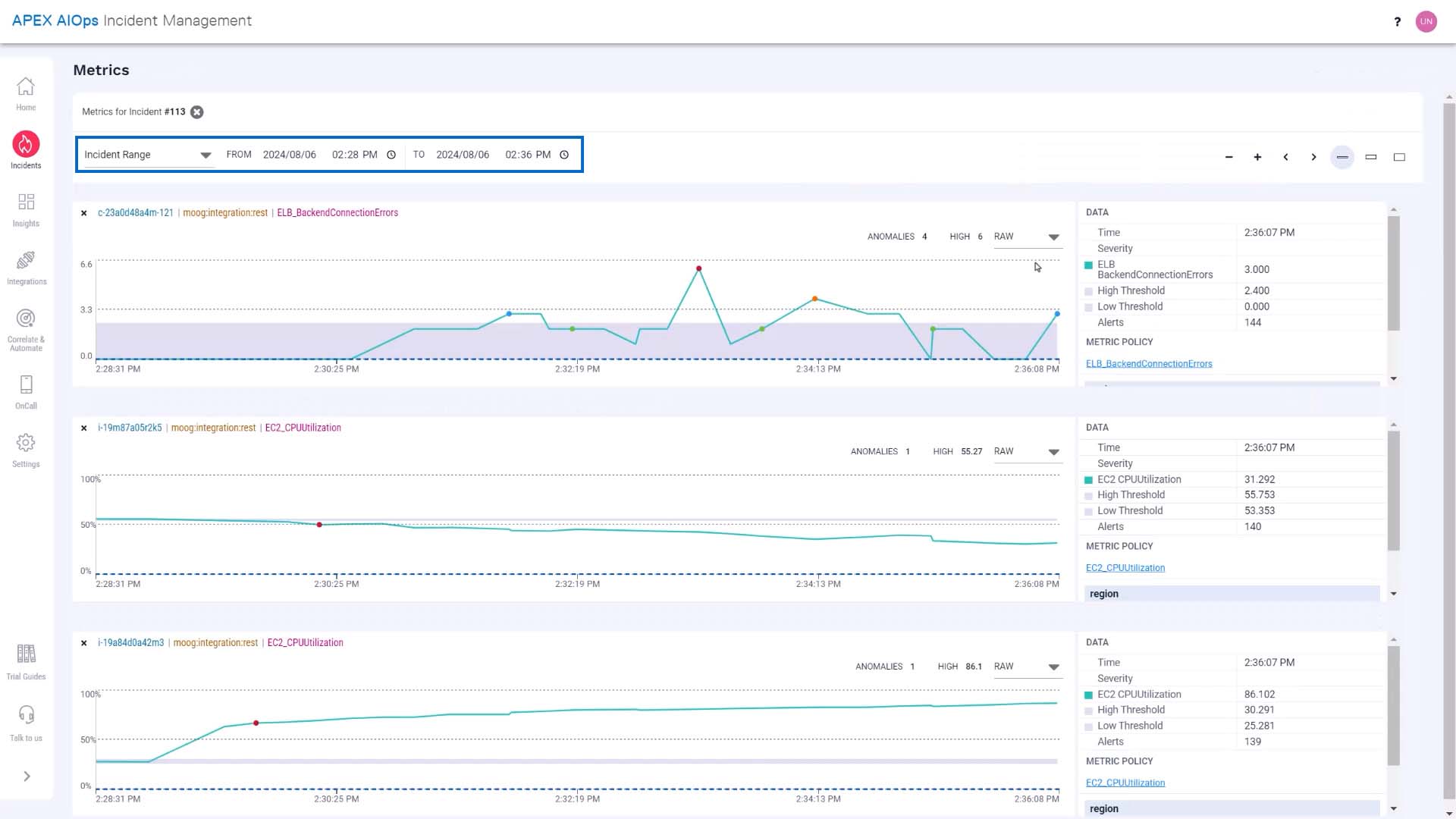

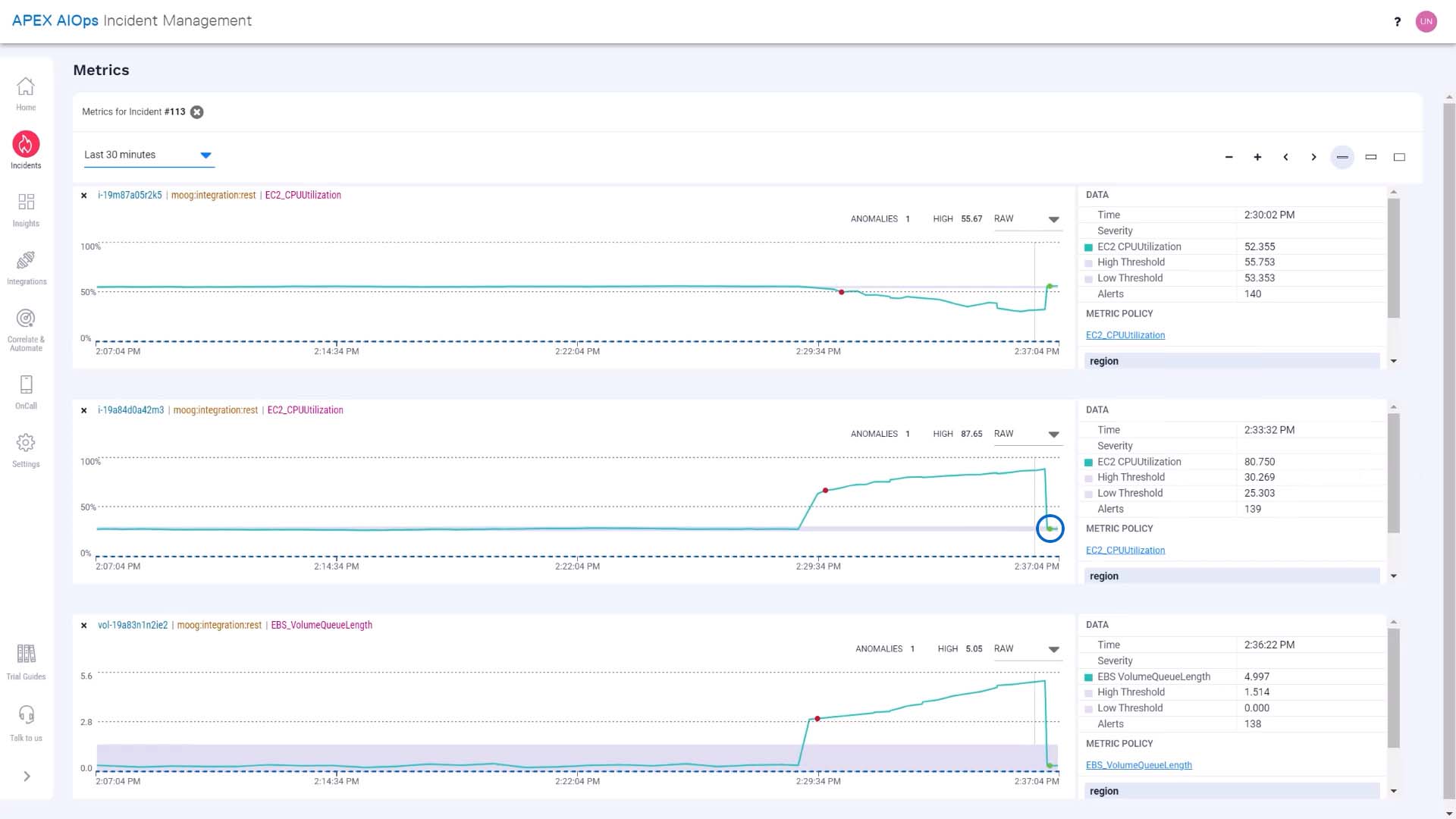

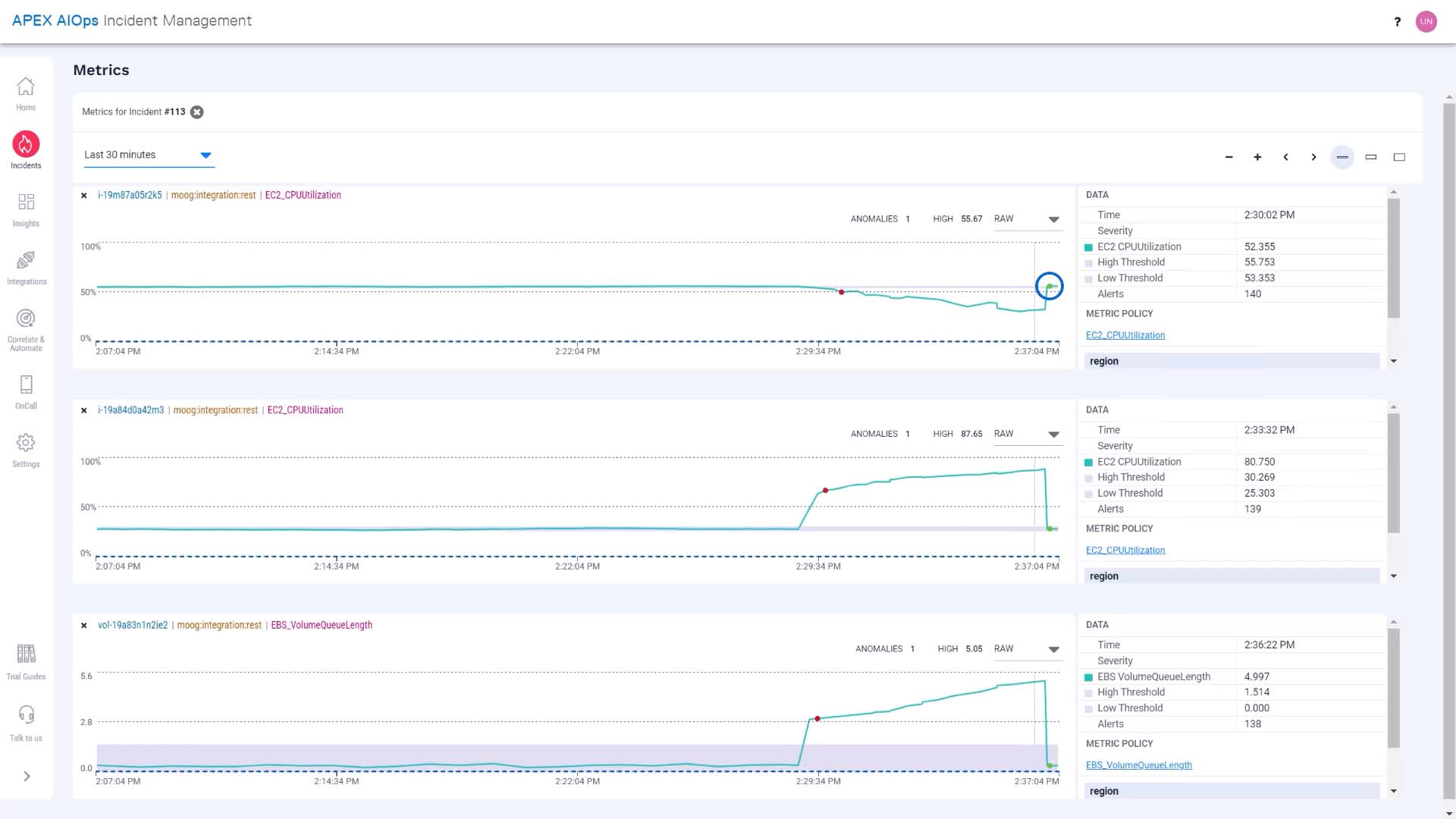

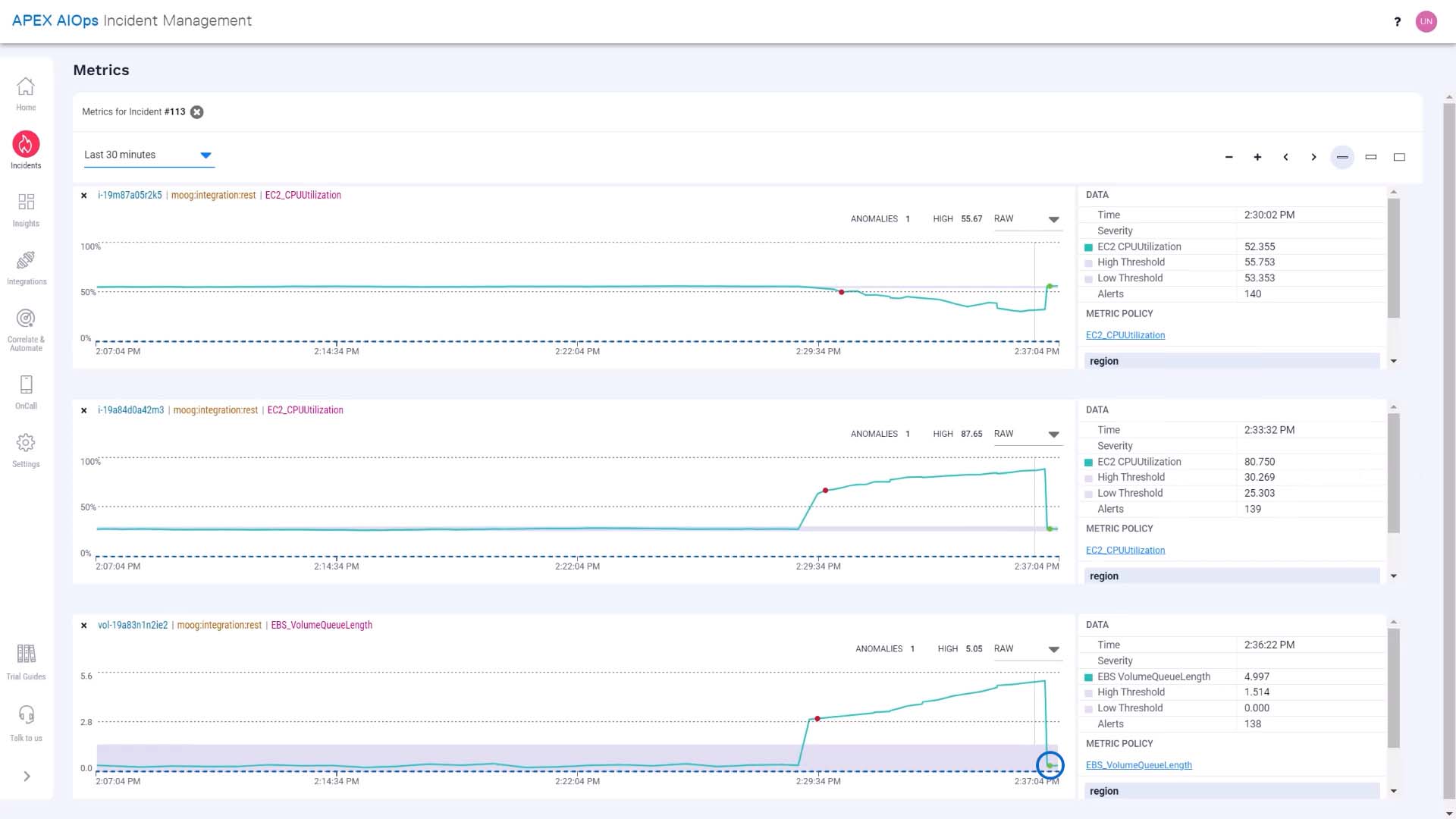

And the metric information of the alert is visually presented here.

Incident Management shows you the relevant context for the alerts and how they relate to each other. This way, it’s much easier to grasp how the whole incident unfolded over time.

According to this, the volume queue length metric exceeded the threshold level and triggered a warning alert.
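The idea of a metric crossing a threshold and raising a warning can be sketched in a few lines of Python. This is an assumption-laden illustration, not how Incident Management evaluates metrics: `check_threshold`, the metric name string, and the numeric values are hypothetical.

```python
from typing import Optional


def check_threshold(metric: str, value: float, warning_threshold: float) -> Optional[dict]:
    """Return a warning alert (as a dict) if value exceeds the threshold, else None."""
    if value > warning_threshold:
        return {
            "metric": metric,
            "severity": "warning",
            "value": value,
            "threshold": warning_threshold,
        }
    return None


# Hypothetical readings: one above the threshold, one below it.
alert = check_threshold("volume_queue_length", value=12.0, warning_threshold=8.0)
no_alert = check_threshold("volume_queue_length", value=3.0, warning_threshold=8.0)
print(alert)
print(no_alert)
```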

Then the CPU usage metric on our front-end server increased and triggered a warning alert...

...the activity on the backend server fell...

...and we are seeing a backend connection error critical alert.

So, could this be the root cause, with a cascading effect that triggered the other alerts?

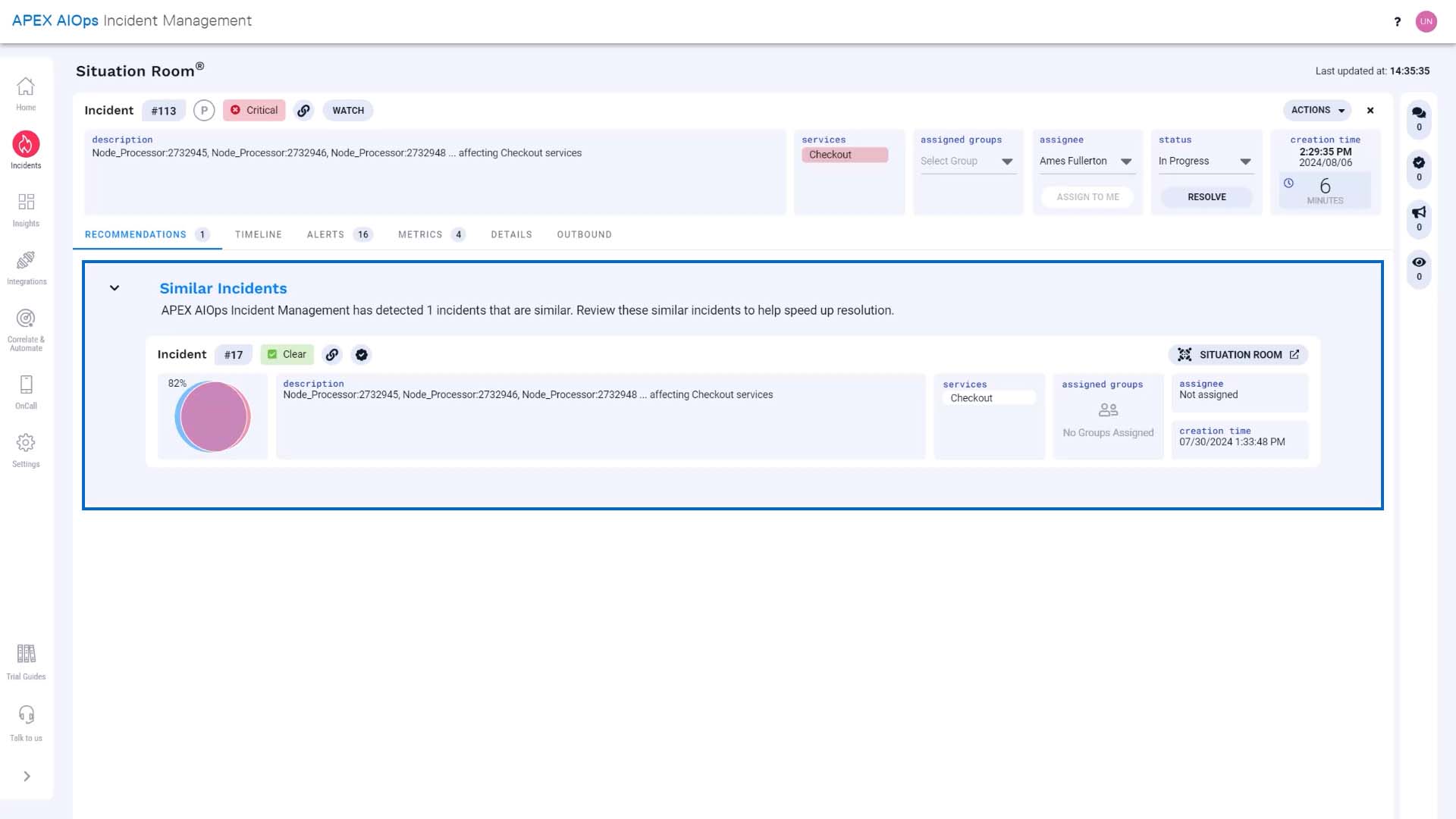

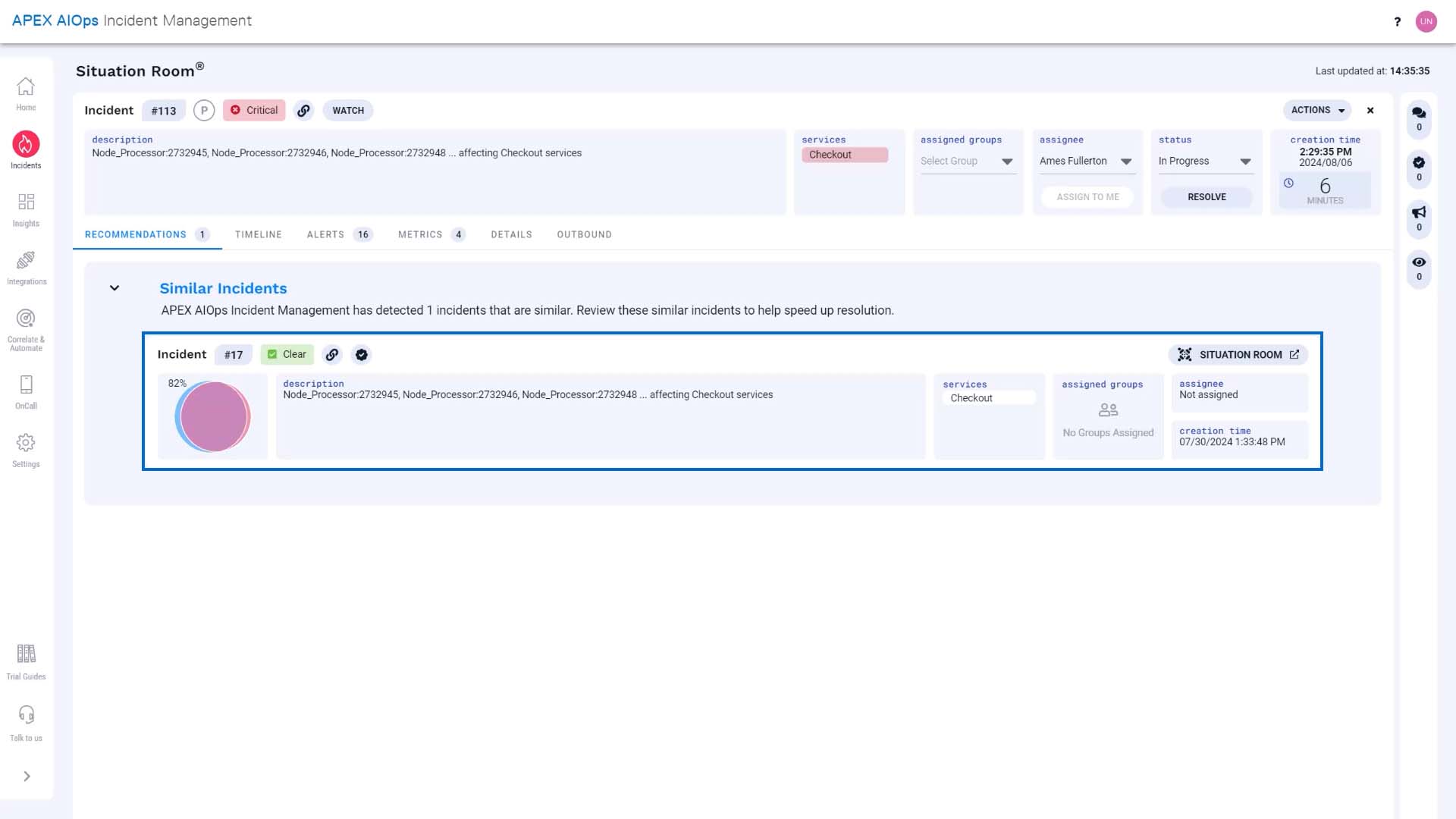

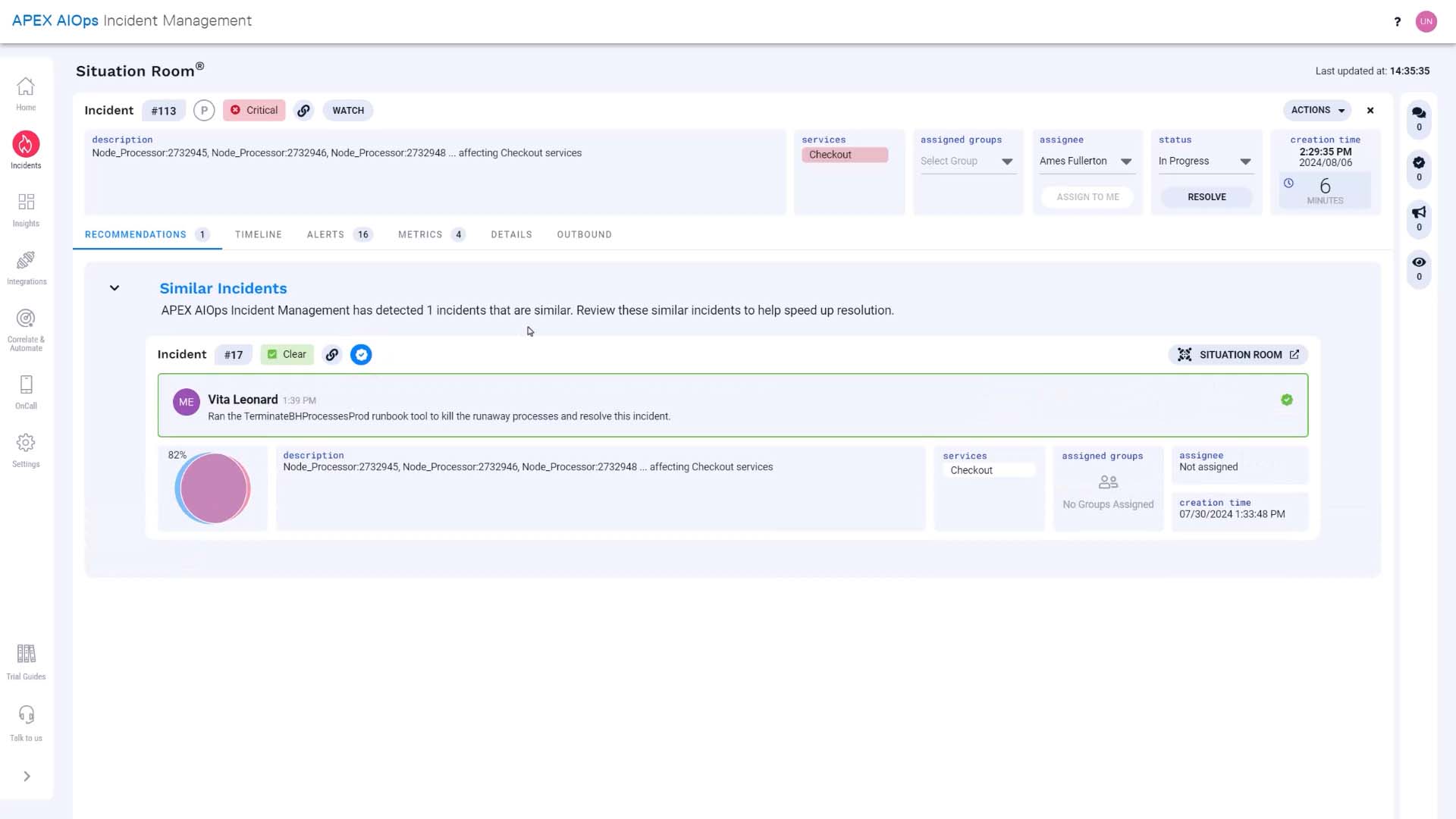

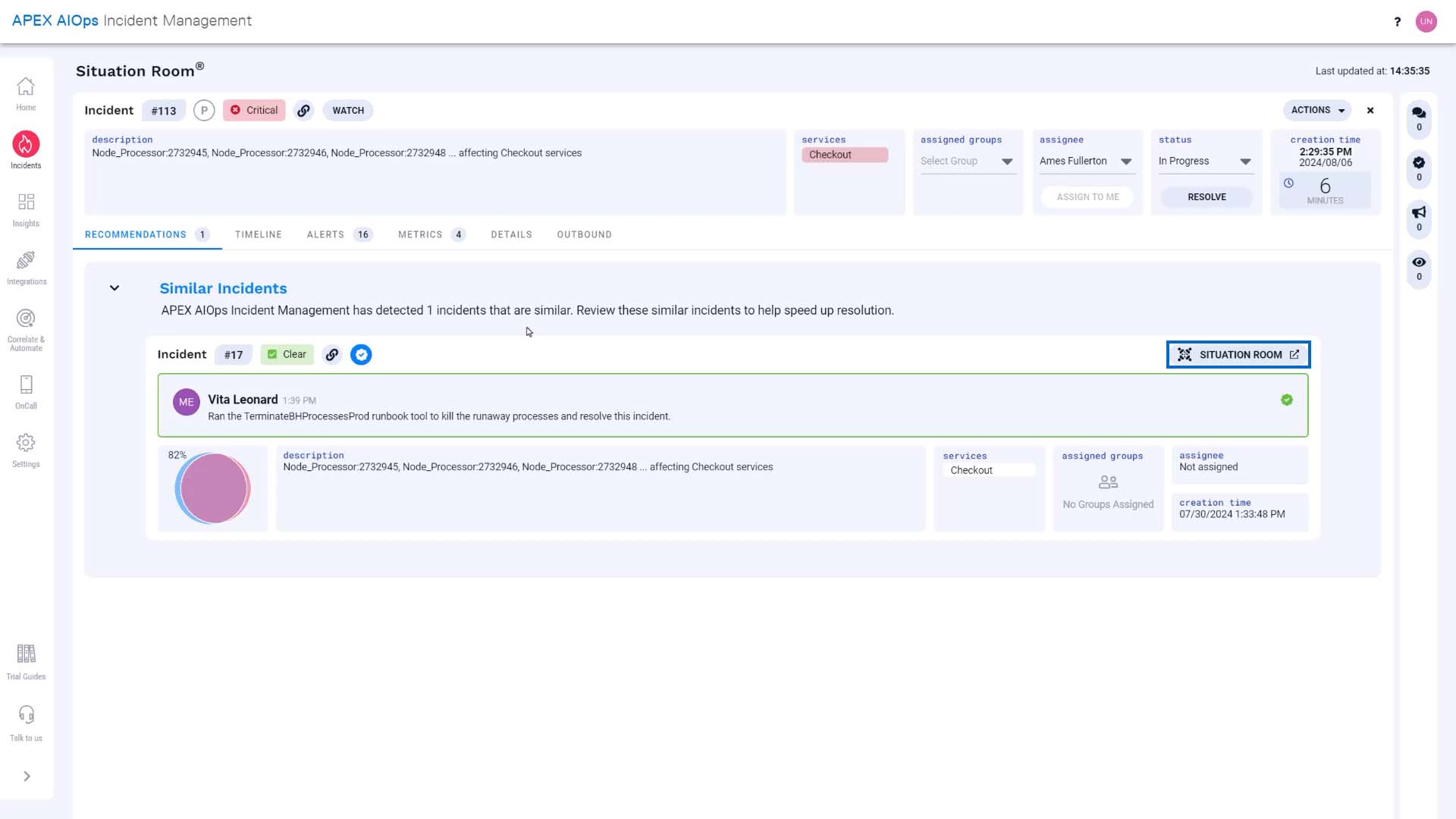

Let’s check out the recommendations tab in the Situation Room for additional insight. Any similar incidents from the past will be surfaced here.

Here's an incident that is 82% similar.
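The video does not say how the similarity percentage is calculated. Purely to illustrate what a similarity score between two incidents can mean, here is a Python sketch using Jaccard similarity over the sets of alert types in each incident. The technique, the function name, and the alert type labels are all assumptions for the example, not the product's method.

```python
def jaccard_similarity(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 if both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical alert types for the current incident and a past one.
current_incident = {
    "volume_queue_length",
    "cpu_usage",
    "backend_activity",
    "backend_connection_error",
}
past_incident = current_incident | {"disk_latency"}

score = jaccard_similarity(current_incident, past_incident)
print(f"{score:.0%} similar")
```

With four shared alert types out of five distinct ones, this toy score comes out at 4/5. A real system would likely weigh many more attributes.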

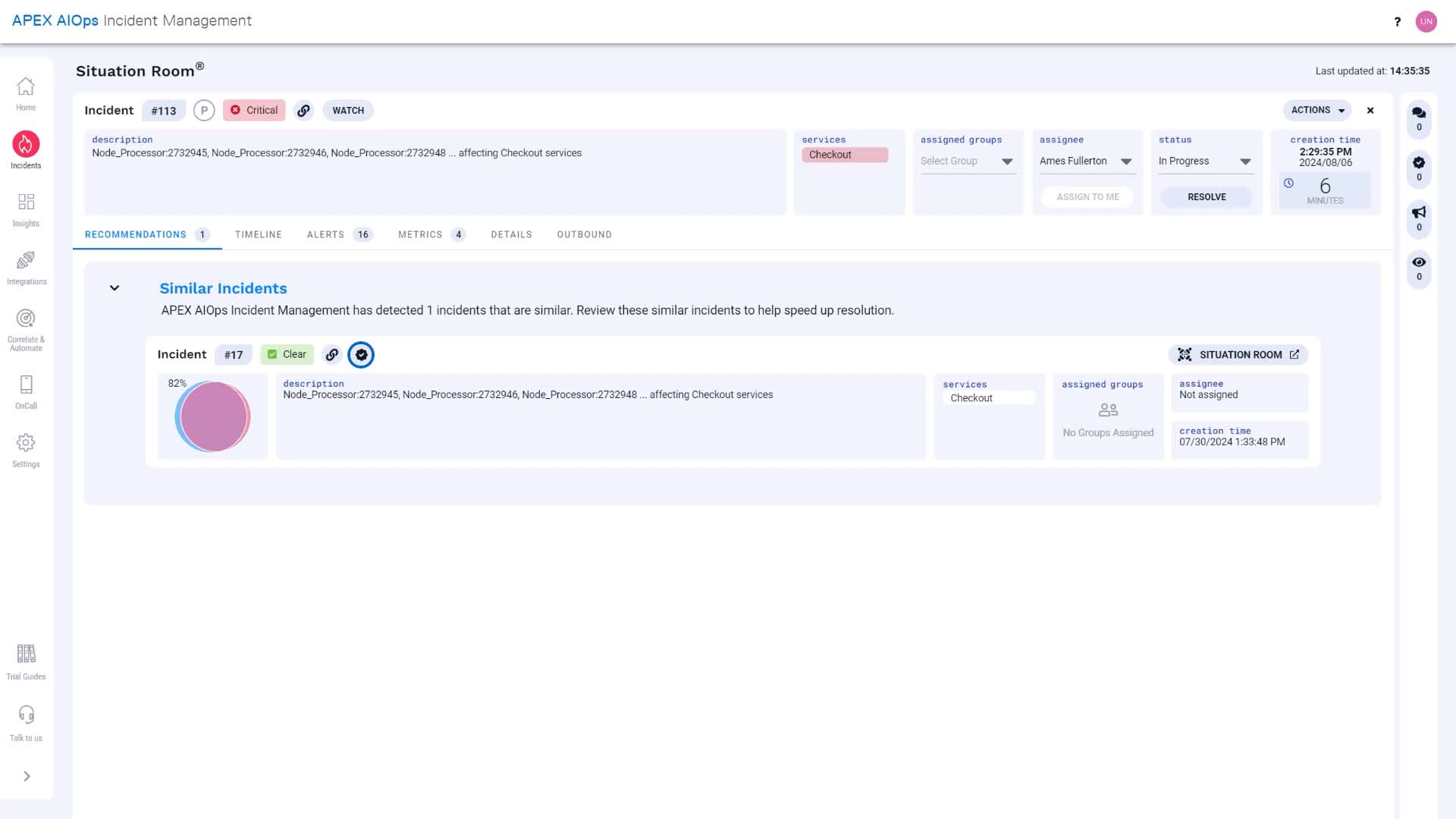

And this icon means it has a resolving step we can review. Great!

This indicates the similar incident was resolved using a runbook tool.

Let’s go to this incident to get more context and confirm we can resolve our incident the same way.

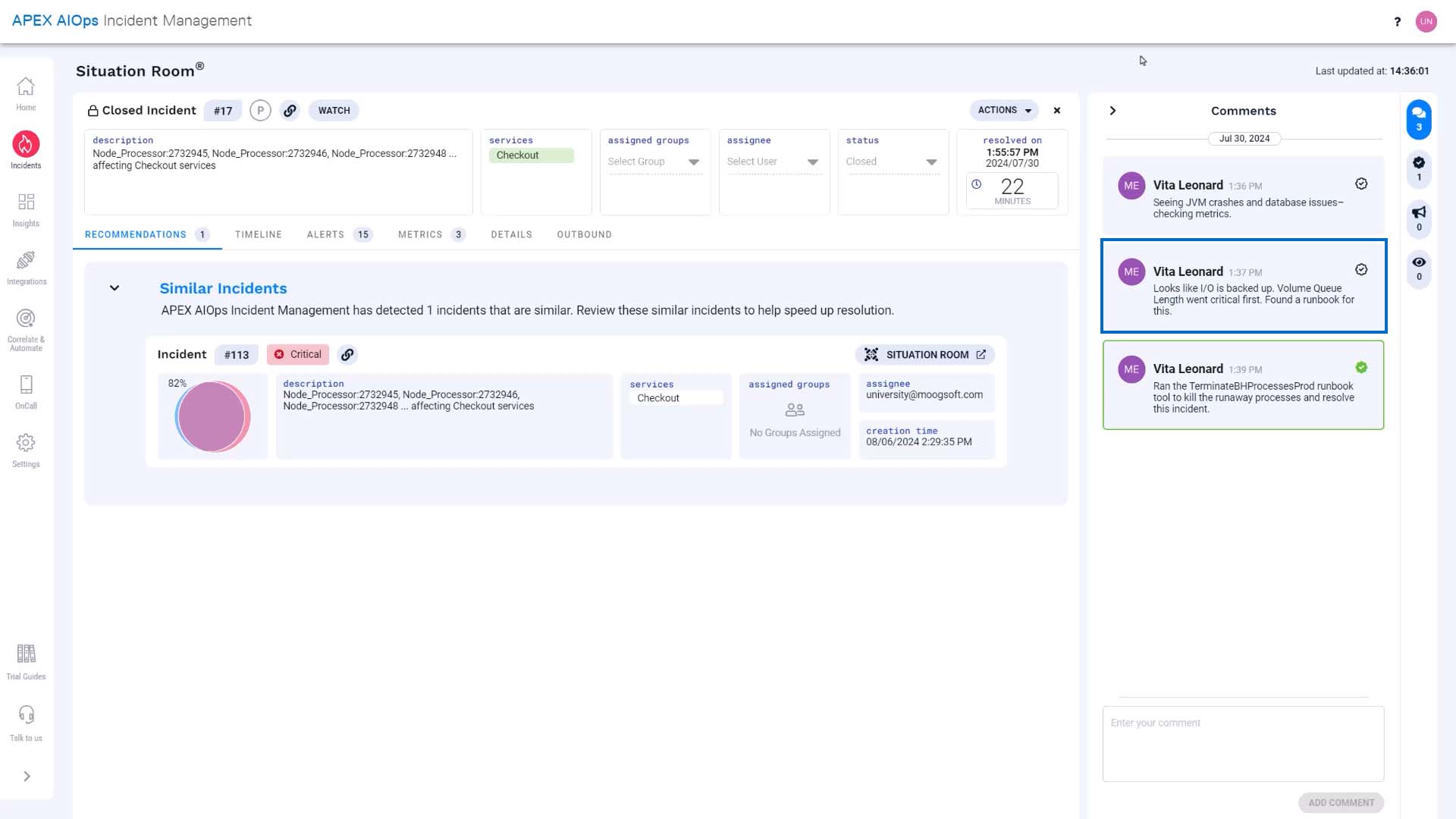

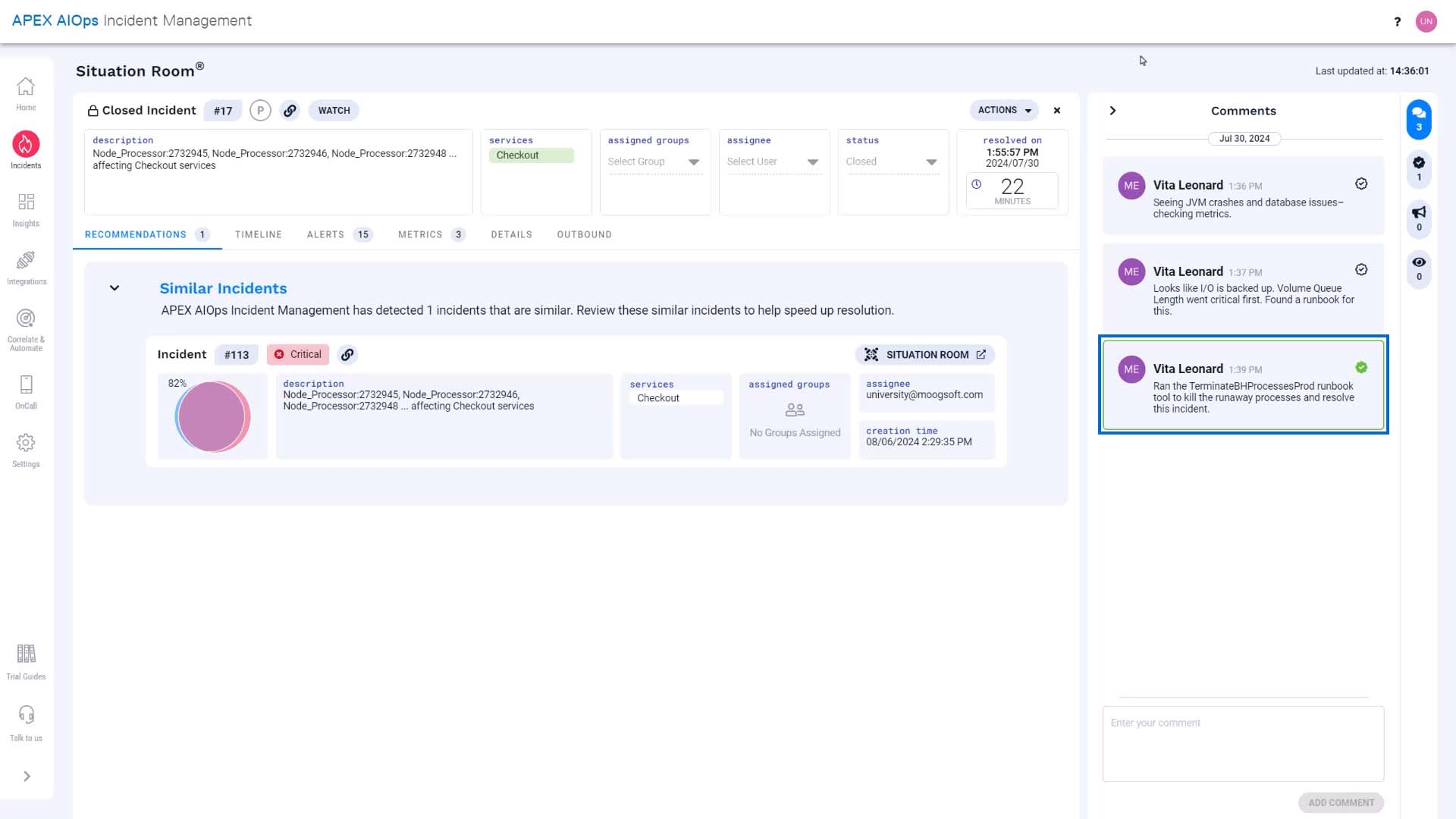

Let’s look at the comments. This incident involved a disk I/O bottleneck that showed up as an increase in Volume Queue Length. Just like our incident.

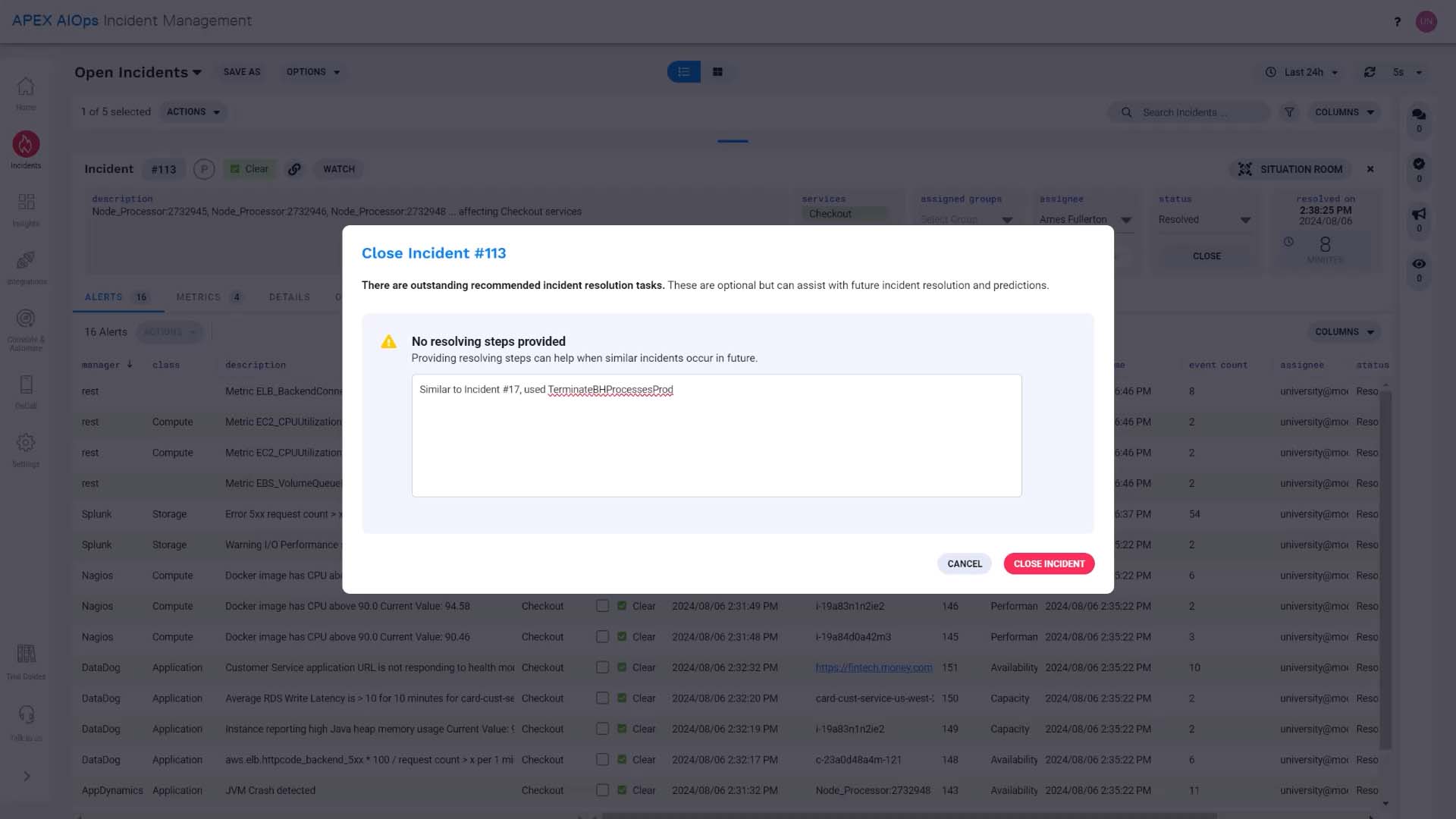

We can use the same runbook tool to terminate runaway processes and free up resources.

Let’s go back to our incident.

Currently, the time window we are seeing starts at the moment the first alert in the incident occurred. We want to see what happens to the metrics when we run the tool, so let’s change the time frame. Now the metrics are going to be updated in real time.

We’ve run the tool, and with the runaway processes that were overloading I/O killed, the CPU load on the front-end web server is back to normal...

...and activity has resumed on the backend server as well.

Nice! The anomaly has resolved and now the metrics are within the normal range previously learned by the system. Good job!
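To make the notion of a "normal range previously learned by the system" concrete, here is a minimal Python sketch that derives a range from past values as the mean plus or minus a few standard deviations. This is one common, simple approach and is only an assumption here. The video does not describe the actual learning method, and the function names, the `k` parameter, and the sample values are hypothetical.

```python
from statistics import mean, stdev


def learn_normal_range(history, k=3.0):
    """Estimate a normal range as mean +/- k sample standard deviations."""
    mu = mean(history)
    sigma = stdev(history)
    return mu - k * sigma, mu + k * sigma


def is_anomalous(value, normal_range):
    """True if value falls outside the learned (low, high) range."""
    low, high = normal_range
    return value < low or value > high


# Hypothetical CPU usage history (percent) under normal load.
history = [40.0, 42.0, 38.0, 41.0, 39.0]
normal = learn_normal_range(history)

print(is_anomalous(95.0, normal))  # reading during the incident
print(is_anomalous(41.0, normal))  # reading after the runbook tool ran
```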

Now all the alerts in our incident have cleared, as has the incident itself.

The incident status has been changed to resolved. We'll document our solution, and the case is closed!

Just like that, we have resolved our first incident in Incident Management. Now it’s your turn to experience this workflow yourself!

Thanks for watching!