Alert correlation example

This example illustrates how APEX AIOps Incident Management clusters alerts into incidents with just a few pieces of high-level information.

Note

This example shows the correlation definition in isolation to illustrate basic principles. Note that correlation definitions are located within correlation groups. You can create multiple correlation groups, each with one or more correlation definitions, and other settings within correlation groups can also affect how alerts are clustered into incidents.

For the purposes of this example, imagine you are a DevOps engineer responsible for setting up Incident Management. You are using Amazon CloudWatch and Incident Management collectors to monitor your infrastructure. You access the Correlation feature and create a new correlation definition.

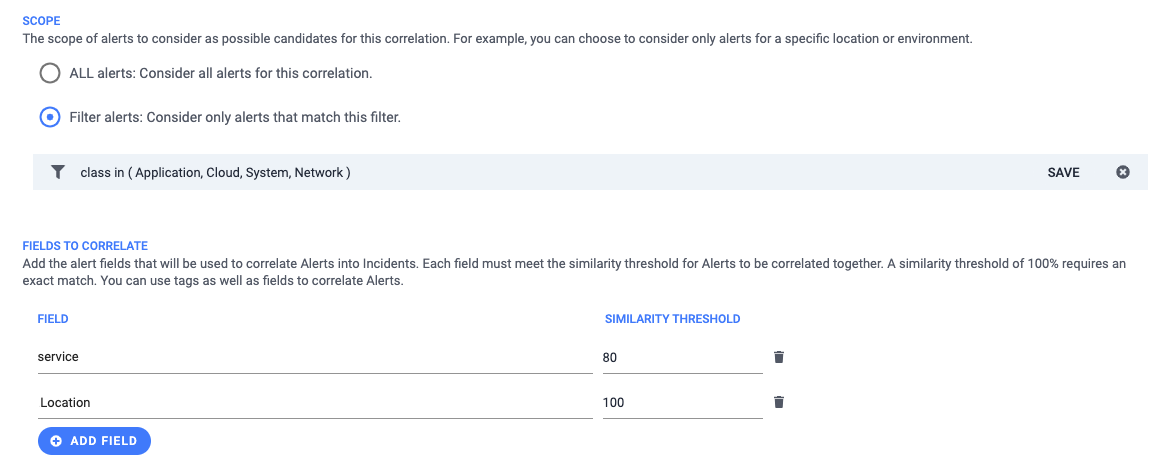

You want to cluster application, system, cloud, and network alerts for the same service in the same location. When prompted for the alert fields to correlate, you select the following:

The tags.region field, with 75% similarity. The 75% similarity helps cluster alerts with slight variations on the same AWS region name.

The service field, with 100% similarity. Because service is a list, the similarity can only be set to 100%. This means that one or more values for the service field in an alert must exactly match one or more values in the service field of another alert (or services in incidents) to match that field.

With these settings, the correlation definition resembles the following:

| Field | Similarity |
| --- | --- |
| tags.region | 75% |
| service | 100% |
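The two field rules can be sketched in a few lines of Python. This is a minimal illustration, not the product's implementation: the `DEFINITION` dictionary, the function names, and the use of `difflib.SequenceMatcher` as the similarity measure are all assumptions made for this sketch. The similarity algorithm Incident Management actually uses is not described here and may score strings differently.

```python
from difflib import SequenceMatcher

# Hypothetical representation of the definition: field name -> required similarity.
DEFINITION = {"tags.region": 0.75, "service": 1.0}


def text_similarity(a: str, b: str) -> float:
    """Stand-in similarity score in [0, 1]; the product's real measure may differ."""
    return SequenceMatcher(None, a, b).ratio()


def field_matches(a, b, threshold: float) -> bool:
    """List fields need one exactly equal value; text fields need enough similarity."""
    if isinstance(a, list) or isinstance(b, list):
        a_values = a if isinstance(a, list) else [a]
        b_values = b if isinstance(b, list) else [b]
        return bool(set(a_values) & set(b_values))
    return text_similarity(a, b) >= threshold


def alerts_correlate(alert_a: dict, alert_b: dict, definition: dict = DEFINITION) -> bool:
    """Every field in the definition must match for two alerts to cluster."""
    return all(
        field_matches(alert_a[field], alert_b[field], threshold)
        for field, threshold in definition.items()
    )


first = {"service": ["cust-login_1.2"], "tags.region": "us-east-2"}
variant_region = {"service": ["cust-login_1.2"], "tags.region": "us-east2"}
variant_service = {"service": ["custlogin_1.2"], "tags.region": "us-east2"}

print(alerts_correlate(first, variant_region))   # True
print(alerts_correlate(first, variant_service))  # False
```

A slightly different spelling of the region still clears the 75% threshold, while a slightly different spelling of the service does not match, because list values are compared for exact equality.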

You accept the defaults for the other options. You click Save and the correlation engine processes new alerts using your correlation definition:

Amazon CloudWatch sends an alarm to the Events API: inbound network traffic is high on cntnr23.

The alarm passes through the enrichment and deduplication pipelines.

The alarm arrives at the correlation engine as Alert 100 with class = network, service = dbQuery_1.0, and tags.region = us-east-1.

The network value for the class field matches your filter, so this alert is a candidate for correlation. The engine creates a new incident based on this alert. As yet there are no correlations with any other alerts.

Alerts

| id | class | source | service | tags.region |
| --- | --- | --- | --- | --- |
| 100 | network | cntnr23 | dbQuery_1.0 | us-east-1 |

Incidents

| id | classes | sources | services | tags.region | alerts |
| --- | --- | --- | --- | --- | --- |
| 1 | network | cntnr23 | dbQuery_1.0 | us-east-1 | 100 |

Amazon CloudWatch flags an anomaly and sends it to the Events API: a spike in 4xx (bad requests) at a specific endpoint.

The anomaly passes through the pipeline and arrives at the correlation engine as Alert 101 with class = API.

The correlation scope does not include class = API, so this alert is not a candidate for correlation.
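The scope check that excludes Alert 101 can be sketched as a simple membership test. The set of in-scope classes below is an assumption inferred from the scenario (application, system, cloud, and network alerts); `SCOPE_CLASSES` and `is_candidate` are hypothetical names, not part of the product.

```python
# Assumed scope filter: the four alert classes named in the scenario.
SCOPE_CLASSES = {"application", "system", "cloud", "network"}


def is_candidate(alert: dict, scope_classes: set = SCOPE_CLASSES) -> bool:
    """An alert is considered for correlation only if its class is in scope."""
    return alert.get("class") in scope_classes


alert_100 = {"id": 100, "class": "network"}
alert_101 = {"id": 101, "class": "API"}

for alert in (alert_100, alert_101):
    print(alert["id"], is_candidate(alert))
# 100 True
# 101 False
```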

An Incident Management collector flags an anomaly and sends it to the Metrics API: free memory is down on cntnr00.

The anomaly passes through the pipeline and arrives at the correlation engine as Alert 102 with class = system, service = wsfe_1.0, and tags.region = us-west-1.

The alert passes your correlation filter, so the engine creates a new incident. Again, there are no correlations based on your definition.

Alerts

| id | class | source | service | tags.region |
| --- | --- | --- | --- | --- |
| 100 | network | cntnr23 | dbQuery_1.0 | us-east-1 |
| 102 | system | cntnr00 | wsfe_1.0 | us-west-1 |

Incidents

| id | classes | sources | services | tags.region | alerts |
| --- | --- | --- | --- | --- | --- |
| 1 | network | cntnr23 | dbQuery_1.0 | us-east-1 | 100 |
| 2 | system | cntnr00 | wsfe_1.0 | us-west-1 | 102 |

The anomaly detector flags another anomaly in the collector metrics: HTTP response times are spiking on cntnr01.

The anomaly arrives at the correlation engine as Alert 103 with class = application, service = wsfe_1.0, and tags.region = us-west-1.

The engine finds that Alerts 102 and 103 are correlated based on your definition: their services match and both have a value of us-west-1 for tags.region.

The correlation engine adds Alert 103 to Incident 2.

Alerts

| id | class | source | service | tags.region |
| --- | --- | --- | --- | --- |
| 100 | network | cntnr23 | dbQuery_1.0 | us-east-1 |
| 102 | system | cntnr00 | wsfe_1.0 | us-west-1 |
| 103 | application | cntnr01 | wsfe_1.0 | us-west-1 |

Incidents

| id | classes | sources | services | tags.region | alerts |
| --- | --- | --- | --- | --- | --- |
| 1 | network | cntnr23 | dbQuery_1.0 | us-east-1 | 100 |
| 2 | system, application | cntnr00, cntnr01 | wsfe_1.0 | us-west-1 | 102, 103 |
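When Alert 103 joins Incident 2, the incident's list fields grow to cover both alerts. The sketch below shows one way such aggregation could work, assuming each incident field is an ordered list of unique values. The dictionary layout and the `new_incident`, `add_alert`, and `add_unique` helpers are hypothetical and chosen only to mirror the fields in the tables.

```python
def add_unique(values: list, value) -> None:
    """Append value only if it is not already present, keeping arrival order."""
    if value not in values:
        values.append(value)


def new_incident(incident_id: int, alert: dict) -> dict:
    """Create an incident seeded with the fields of its first alert."""
    return {
        "id": incident_id,
        "classes": [alert["class"]],
        "sources": [alert["source"]],
        "services": list(alert["service"]),
        "tags.region": [alert["tags.region"]],
        "alerts": [alert["id"]],
    }


def add_alert(incident: dict, alert: dict) -> None:
    """Fold a correlated alert into the incident's aggregated fields."""
    add_unique(incident["classes"], alert["class"])
    add_unique(incident["sources"], alert["source"])
    for service in alert["service"]:
        add_unique(incident["services"], service)
    add_unique(incident["tags.region"], alert["tags.region"])
    add_unique(incident["alerts"], alert["id"])


alert_102 = {"id": 102, "class": "system", "source": "cntnr00",
             "service": ["wsfe_1.0"], "tags.region": "us-west-1"}
alert_103 = {"id": 103, "class": "application", "source": "cntnr01",
             "service": ["wsfe_1.0"], "tags.region": "us-west-1"}

incident_2 = new_incident(2, alert_102)
add_alert(incident_2, alert_103)
print(incident_2)
```

After both calls, the incident lists two classes, two sources, and two alert IDs, while the shared service and region each appear once.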

The anomaly detector flags two CloudWatch metrics for another container: CPU utilization and disk write operations are significantly down on containers running the customer-login service.

Alert 104 arrives with class = cloud, service = cust-login_1.2, and tags.region = us-east-2.

This alert passes the filter but does not correlate with any open alerts. The engine creates Incident 3 with Alert 104.

Alert 105 arrives with class = cloud, service = cust-login_1.2, and tags.region = us-east2.

The engine finds that Alerts 104 and 105 are similar based on their nearly identical AWS region names and matching services. The engine adds Alert 105 to Incident 3.

Alerts

| id | class | source | service | tags.region |
| --- | --- | --- | --- | --- |
| 100 | network | cntnr23 | dbQuery_1.0 | us-east-1 |
| 102 | system | cntnr00 | wsfe_1.0 | us-west-1 |
| 103 | application | cntnr01 | wsfe_1.0 | us-west-1 |
| 104 | cloud | cntnr02 | cust-login_1.2 | us-east-2 |
| 105 | cloud | cntnr07 | cust-login_1.2 | us-east2 |

Incidents

| id | classes | sources | services | tags.region | alerts |
| --- | --- | --- | --- | --- | --- |
| 1 | network | cntnr23 | dbQuery_1.0 | us-east-1 | 100 |
| 2 | system, application | cntnr00, cntnr01 | wsfe_1.0 | us-west-1 | 102, 103 |
| 3 | cloud | cntnr02, cntnr07 | cust-login_1.2 | us-east-2, us-east2 | 104, 105 |

Note that if Alert 105 had custlogin_1.2 as a value for service, the alert would no longer match Alert 104. The service field is a list, and at least one value in the list must match exactly for the alerts to be considered a match for correlation. Also, because both service and tags.region are included in the correlation definition, the criteria must be met for both fields before two alerts can be clustered.

An hour and a half goes by. Incident Management ingests more alerts and adds them to incidents based on your correlation definition. Another alert arrives from CloudWatch, noting that network traffic is still high on cntnr23.

The alarm arrives at the correlation engine as Alert 117 with class = network, service = dbQuery_1.0, and tags.region = us-east-1.

This alert matches your correlation filter. It also meets the service and tags.region requirements with Alert 100 in Incident 1.

However, the correlation time window for Incident 1 has expired. Each correlation definition has a configurable time window, which is set to 65 minutes by default.

The engine creates a new incident and adds Alert 117 to it.

Alerts

| id | class | source | service | tags.region |
| --- | --- | --- | --- | --- |
| 100 | network | cntnr23 | dbQuery_1.0 | us-east-1 |
| 102 | system | cntnr00 | wsfe_1.0 | us-west-1 |
| 103 | application | cntnr01 | wsfe_1.0 | us-west-1 |
| 104 | cloud | cntnr02 | cust-login_1.2 | us-east-2 |
| 105 | cloud | cntnr07 | cust-login_1.2 | us-east2 |
| 117 | network | cntnr23 | dbQuery_1.0 | us-east-1 |

Incidents

| id | classes | sources | services | tags.region | alerts |
| --- | --- | --- | --- | --- | --- |
| 1 | network | cntnr23 | dbQuery_1.0 | us-east-1 | 100 |
| 2 | system, application | cntnr00, cntnr01 | wsfe_1.0 | us-west-1 | 102, 103 |
| 3 | cloud | cntnr02, cntnr07 | cust-login_1.2 | us-east-2, us-east2 | 104, 105 |
| 4 | network | cntnr23 | dbQuery_1.0 | us-east-1 | 117 |
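The time window check that sends Alert 117 to a new incident can be sketched as follows. This is an illustration under stated assumptions: it measures the window from the moment the incident was created, which may not be how Incident Management measures it, and the timestamps are arbitrary values chosen for the example. `DEFAULT_WINDOW` and `window_open` are hypothetical names.

```python
from datetime import datetime, timedelta

# Default window from the example; configurable per correlation definition.
DEFAULT_WINDOW = timedelta(minutes=65)


def window_open(incident_created_at: datetime, alert_time: datetime,
                window: timedelta = DEFAULT_WINDOW) -> bool:
    """Return True while the incident can still accept correlated alerts.

    Assumption: the window starts when the incident is created.
    """
    return alert_time - incident_created_at <= window


# Arbitrary illustrative timestamps, not taken from the product.
incident_1_created = datetime(2024, 1, 1, 9, 0)
early_alert_time = incident_1_created + timedelta(minutes=30)
alert_117_time = incident_1_created + timedelta(minutes=90)

print(window_open(incident_1_created, early_alert_time))  # True
print(window_open(incident_1_created, alert_117_time))    # False
```

An alert arriving 90 minutes after the incident was created falls outside the 65-minute window, so even a perfect field match results in a new incident.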