Set Up the Redundancy Server Role

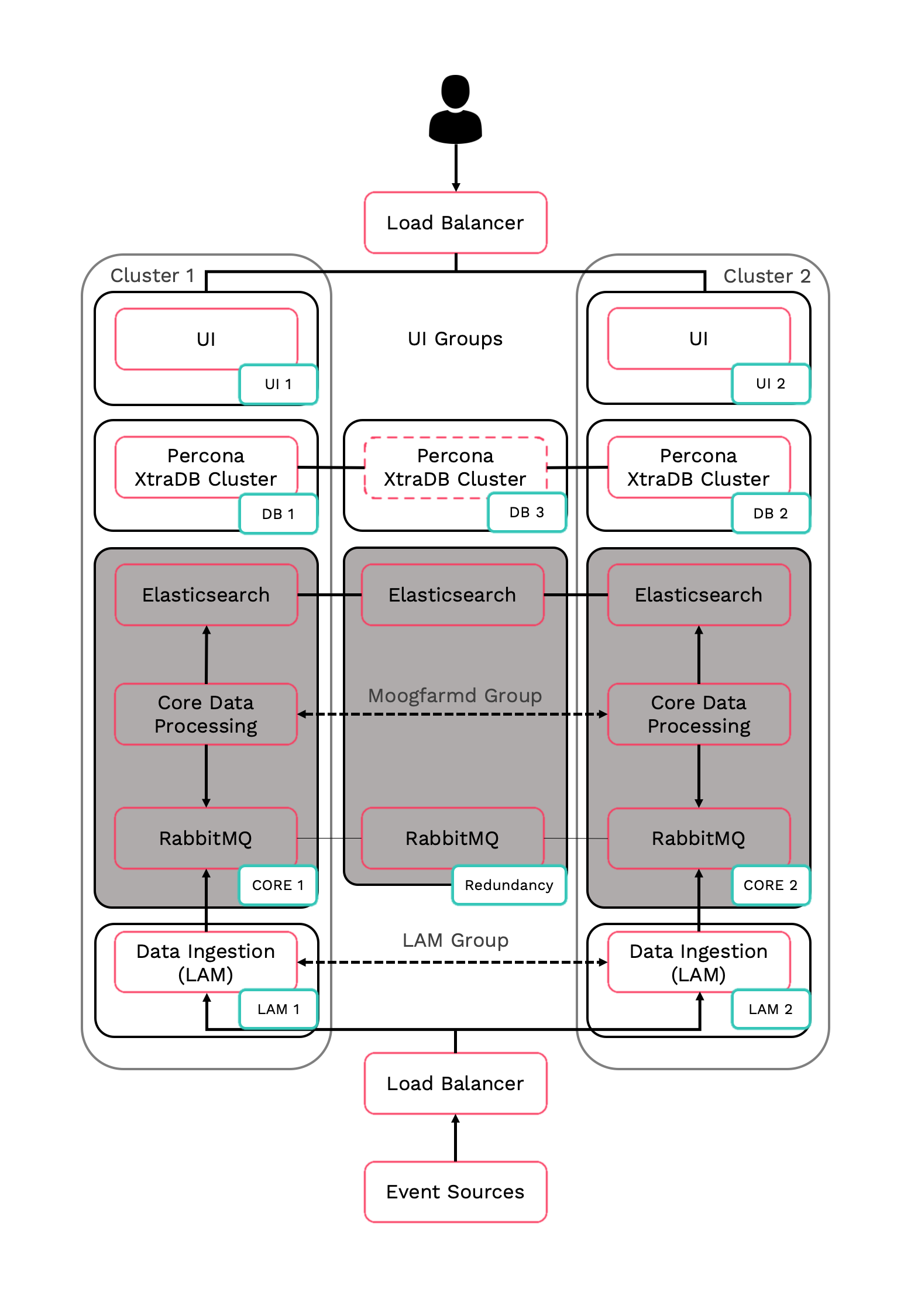

In the Moogsoft AIOps HA architecture, both RabbitMQ and Elasticsearch run as three-node clusters. The three-node clusters prevent issues with ambiguous data state, such as a "split-brain", in which two parts of a partitioned cluster each continue to operate independently.
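The usual reasoning behind a three-node minimum is majority quorum: after a network partition, only the side that still holds a strict majority of nodes should keep acting as the cluster. The minimal sketch below works through that arithmetic. `quorum` and `tolerated_failures` are hypothetical helpers for illustration, not Moogsoft tools, and the sketch does not model how RabbitMQ or Elasticsearch actually handle partitions.

```shell
#!/usr/bin/env bash
# Sketch: majority-quorum arithmetic for an N-node cluster.
# quorum and tolerated_failures are illustrative helpers, not Moogsoft tools.

# Smallest number of nodes that forms a strict majority of N.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of nodes that can fail while a majority still remains.
tolerated_failures() {
  echo $(( $1 - $(quorum "$1") ))
}

for n in 1 2 3 4 5; do
  printf '%d nodes: quorum %d, tolerates %d failure(s)\n' \
    "$n" "$(quorum "$n")" "$(tolerated_failures "$n")"
done
```

With two nodes the majority is two, so losing either node leaves no majority; with three nodes the majority is still two, so one node can fail. Four nodes tolerate no more failures than three, which is why odd cluster sizes are the common choice.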

RabbitMQ is the message bus used by Moogsoft AIOps, and Elasticsearch provides the search functionality.

The three nodes of each cluster are distributed across the two Core servers and the Redundancy server.

HA architecture

In our distributed HA installation, the RabbitMQ and Elasticsearch components are installed on the Core 1, Core 2 and Redundancy servers.

- Core 1: RabbitMQ Node 1, Elasticsearch Node 1
- Core 2: RabbitMQ Node 2, Elasticsearch Node 2
- Redundancy server: RabbitMQ Node 3, Elasticsearch Node 3

Refer to Distributed HA System Firewall for more information on connectivity within a fully distributed HA architecture.

Install Redundancy server

1. Install the Moogsoft AIOps components on the Redundancy server:

```shell
VERSION=7.3.1.1; yum -y install moogsoft-common-${VERSION} \
    moogsoft-mooms-${VERSION} \
    moogsoft-search-${VERSION} \
    moogsoft-utils-${VERSION}
```

Edit the `~/.bashrc` file to contain the following lines:

```shell
export MOOGSOFT_HOME=/usr/share/moogsoft
export APPSERVER_HOME=/usr/share/apache-tomcat
export JAVA_HOME=/usr/java/latest
export PATH=$PATH:$MOOGSOFT_HOME/bin:$MOOGSOFT_HOME/bin/utils
```

Source the `.bashrc` file:

```shell
source ~/.bashrc
```
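Appending the environment lines by hand is easy to repeat by accident, which leaves duplicate exports in `~/.bashrc`. A minimal sketch of an idempotent alternative follows; `add_line_once` and `add_moogsoft_env` are hypothetical helpers, and the four lines they write are exactly the ones listed in this step.

```shell
#!/usr/bin/env bash
# Sketch: add each environment line to a startup file only if that exact line
# is not already present, so re-running the step is harmless.
# add_line_once and add_moogsoft_env are hypothetical helpers.

add_line_once() {
  local file=$1 line=$2
  touch "$file"
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  if grep -qxF -- "$line" "$file"; then
    return 0
  fi
  # Avoid gluing the new line onto an unterminated last line.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

add_moogsoft_env() {
  local file=${1:-$HOME/.bashrc}
  # Single quotes keep $PATH and $MOOGSOFT_HOME literal in the file.
  add_line_once "$file" 'export MOOGSOFT_HOME=/usr/share/moogsoft'
  add_line_once "$file" 'export APPSERVER_HOME=/usr/share/apache-tomcat'
  add_line_once "$file" 'export JAVA_HOME=/usr/java/latest'
  add_line_once "$file" 'export PATH=$PATH:$MOOGSOFT_HOME/bin:$MOOGSOFT_HOME/bin/utils'
}
```

Usage: run `add_moogsoft_env` and then `source ~/.bashrc` as in the step above.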

2. Initialize RabbitMQ cluster node 3 on the Redundancy server and join it to the cluster.

- On the Redundancy server, initialize RabbitMQ. Use the same zone name as on Core 1 and Core 2:

```shell
moog_init_mooms.sh -pz <zone>
```

- The Erlang cookie must be the same on all RabbitMQ nodes. Replace the Erlang cookie on the Redundancy server with the Core 1 Erlang cookie. On both servers, the cookie is located at `/var/lib/rabbitmq/.erlang.cookie`.

You may first need to change the permissions on the Redundancy server cookie so that the file can be overwritten. For example:

```shell
chmod 600 /var/lib/rabbitmq/.erlang.cookie
```

After replacing the cookie, make the Redundancy server cookie read-only:

```shell
chmod 400 /var/lib/rabbitmq/.erlang.cookie
```
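Before restarting RabbitMQ, it can help to confirm that the copied cookie is byte-identical to the Core 1 cookie and is owner-read-only. A minimal sketch, assuming a copy of the Core 1 cookie has already been fetched to the Redundancy server (for example with `scp`); `cookie_ready` is a hypothetical helper and relies on GNU `stat`.

```shell
#!/usr/bin/env bash
# Sketch: check that the local Erlang cookie matches a reference copy and has
# mode 400. cookie_ready is a hypothetical helper, not a RabbitMQ command.

cookie_ready() {
  local reference=$1 target=${2:-/var/lib/rabbitmq/.erlang.cookie}
  local mode
  # cmp -s compares byte for byte and reports only through its exit status.
  if ! cmp -s "$reference" "$target"; then
    echo "cookie differs from $reference" >&2
    return 1
  fi
  # GNU stat: %a prints the permission bits in octal, e.g. 400.
  mode=$(stat -c %a "$target")
  if [ "$mode" != "400" ]; then
    echo "cookie mode is $mode, expected 400" >&2
    return 1
  fi
}
```

Usage, with a hypothetical path for the fetched copy: `cookie_ready /tmp/core1.erlang.cookie`. RabbitMQ reads the cookie when the node starts, which is why the next step restarts `rabbitmq-server`.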

- Restart the `rabbitmq-server` service and join the cluster, substituting the Core 1 server short hostname:

```shell
systemctl restart rabbitmq-server
rabbitmqctl stop_app
rabbitmqctl join_cluster rabbit@<Core 1 server short hostname>
rabbitmqctl start_app
```

The short hostname is the full hostname excluding the DNS domain name. For example, if the hostname is `ip-172-31-82-78.ec2.internal`, the short hostname is `ip-172-31-82-78`. To find the short hostname, run `rabbitmqctl cluster_status` on Core 1.
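The short-hostname rule above amounts to dropping everything from the first dot onwards. A minimal sketch of deriving the `join_cluster` target from a fully qualified hostname; `short_hostname` and `rabbit_node_name` are hypothetical helpers, and the `rabbit@` prefix matches the command in this step.

```shell
#!/usr/bin/env bash
# Sketch: build the RabbitMQ node name for join_cluster from a full hostname.
# short_hostname and rabbit_node_name are hypothetical helpers.

# Removes the DNS domain: the longest suffix starting at the first dot.
short_hostname() {
  local fqdn=$1
  echo "${fqdn%%.*}"
}

# Prefixes the short hostname with the rabbit@ node prefix.
rabbit_node_name() {
  echo "rabbit@$(short_hostname "$1")"
}

rabbit_node_name ip-172-31-82-78.ec2.internal
```

On Core 1 itself, `hostname -s` also prints the short hostname, but the node name reported by `rabbitmqctl cluster_status` remains the value to trust.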

- Apply the HA mirrored queues policy. Use the same zone name as on Core 1:

```shell
rabbitmqctl set_policy -p <zone> ha-all ".+\.HA" '{"ha-mode":"all"}'
```
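The `ha-all` policy applies to queues whose names match the regular expression `.+\.HA`. RabbitMQ treats policy patterns as unanchored regular expressions, so for a simple pattern like this one `grep -E` gives a rough preview of which names would be selected. This is an approximation, not RabbitMQ's own matcher, and the queue names below are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: preview which queue names the ha-all pattern would select.
# grep -E approximates RabbitMQ's regex matching for this simple pattern.
# The queue names are hypothetical, not real Moogsoft queues.

pattern='.+\.HA'

# Reads queue names on stdin, prints the ones the pattern matches.
matching_queues() {
  grep -E -- "$pattern"
}

printf '%s\n' 'events.HA' 'alerts.HA' 'events' 'HA' '.HA' 'moog.HA.backup' \
  | matching_queues
```

`.HA` is not matched because `.+` requires at least one character before the dot, while `moog.HA.backup` is matched because the pattern is not anchored to the end of the name.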

- Run `rabbitmqctl cluster_status` to verify the cluster status, and `rabbitmqctl -p <zone> list_policies` to verify the queue policy. Example output is as follows:

```
Cluster status of node rabbit@ldev02 ...
[{nodes,[{disc,[rabbit@ldev01,rabbit@ldev02]}]},
 {running_nodes,[rabbit@ldev01,rabbit@ldev02]},
 {cluster_name,<<"rabbit@ldev02">>},
 {partitions,[]},
 {alarms,[{rabbit@ldev01,[]},{rabbit@ldev02,[]}]}]
[root@ldev02 rabbitmq]# rabbitmqctl -p MOOG list_policies
Listing policies for vhost "MOOG" ...
MOOG ha-all .+\.HA all {"ha-mode":"all"} 0
```
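To check membership from a script rather than by eye, the `running_nodes` term in the example output above can be parsed. A minimal sketch, assuming the Erlang-term layout shown in that example; newer RabbitMQ releases may print a different, human-readable layout that this parser does not handle. `running_nodes` and `node_is_running` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: list running nodes from `rabbitmqctl cluster_status` output.
# ASSUMPTION: output contains a {running_nodes,[...]} term as in the example.
# running_nodes and node_is_running are hypothetical helpers.

# Reads cluster_status output on stdin, prints one running node per line.
running_nodes() {
  sed -n 's/.*{running_nodes,\[\([^]]*\)]}.*/\1/p' | tr ',' '\n'
}

# Succeeds if node $1 (for example rabbit@ldev02) is an exact entry in the list.
node_is_running() {
  running_nodes | grep -qxF -- "$1"
}
```

Usage on the Redundancy server: `rabbitmqctl cluster_status | node_is_running "rabbit@$(hostname -s)"`. The exact-line match avoids treating `rabbit@ldev0` as a match for `rabbit@ldev01`.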

3. Initialize, configure and start Elasticsearch cluster node 3 on the Redundancy server.

- Initialize Elasticsearch on the Redundancy server:

```shell
moog_init_search.sh
```

- Uncomment and edit the properties of the `/etc/elasticsearch/elasticsearch.yml` file on the Redundancy server as follows:

```yaml
cluster.name: aiops
node.name: <Redundancy server hostname>
...
network.host: 0.0.0.0
http.port: 9200
discovery.zen.ping.unicast.hosts: [ "<Core 1 hostname>","<Core 2 hostname>","<Redundancy server hostname>"]
discovery.zen.minimum_master_nodes: 1
gateway.recover_after_nodes: 1
node.master: true
```
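The "uncomment and edit" step can also be scripted. A minimal sketch that replaces a commented or existing `key: value` line, or appends it if absent, plus a helper that builds the unicast hosts list in the format shown above. `set_yml_property` and `unicast_hosts_value` are hypothetical helpers; they assume one-line `key: value` entries, values without `|`, `&` or backslash characters (special to `sed`), and GNU `sed -i`.

```shell
#!/usr/bin/env bash
# Sketch: uncomment-or-append "key: value" settings in elasticsearch.yml.
# set_yml_property and unicast_hosts_value are hypothetical helpers.

set_yml_property() {
  local file=$1 key=$2 value=$3 re
  # Escape dots so "cluster.name" does not also match "clusterXname".
  re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -qE "^#? *${re}:" "$file"; then
    # Replace the commented or existing line in place (GNU sed).
    sed -i -E "s|^#? *${re}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

# Builds a value such as: [ "core1","core2","redundancy"]
unicast_hosts_value() {
  local out="" h
  for h in "$@"; do
    out="${out:+$out,}\"$h\""
  done
  printf '[ %s]\n' "$out"
}
```

For example, after backing up the file: `set_yml_property /etc/elasticsearch/elasticsearch.yml discovery.zen.ping.unicast.hosts "$(unicast_hosts_value <Core 1 hostname> <Core 2 hostname> <Redundancy server hostname>)"`, substituting the real hostnames.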

- Restart Elasticsearch on the Core 1, Core 2 and Redundancy servers:

```shell
systemctl restart elasticsearch
```

- Verify that the Elasticsearch nodes are working correctly:

```shell
curl -X GET "localhost:9200/_cat/health?v&pretty"
```

Example cluster health status:

```
epoch      timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent
1580490422 17:07:02  aiops   green  3          3         0      0   0    0    0        0             -                  100.0%
```
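The health check can be turned into a pass/fail test by reading columns by name from the header line. A minimal sketch; `health_field` and `cluster_is_green` are hypothetical helpers, and the expected node count of 3 reflects the layout in this document (Core 1, Core 2 and the Redundancy server).

```shell
#!/usr/bin/env bash
# Sketch: read named columns from `_cat/health?v` output and decide whether
# the cluster looks healthy. health_field and cluster_is_green are
# hypothetical helpers.

# $1: column name; stdin: header line followed by one data line.
health_field() {
  awk -v col="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == col) idx = i; next }
    NR == 2 && idx { print $idx }'
}

# stdin: _cat/health?v output. Succeeds if status is green with $1 nodes.
cluster_is_green() {
  local expected=${1:-3} health status nodes
  health=$(cat)
  status=$(printf '%s\n' "$health" | health_field status)
  nodes=$(printf '%s\n' "$health" | health_field node.total)
  [ "$status" = "green" ] && [ "$nodes" = "$expected" ]
}
```

Usage: `curl -s "localhost:9200/_cat/health?v" | cluster_is_green 3`. Looking columns up by header name, rather than by position, keeps the check working if the column order differs.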